Feature

Optics in the Apollo Program

As part of the space race, optical scientists and engineers developed instruments and materials that steered the spacecraft, mapped the moon and brought back some of the 20th century’s most iconic images.

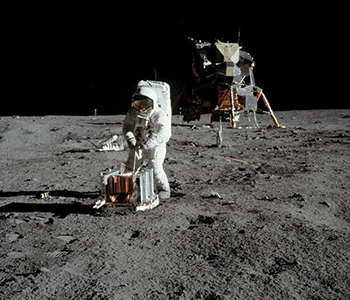

Apollo 11 astronaut Buzz Aldrin assembles a seismic experiment on the moon. The surface stereo close-up camera is behind Aldrin. [NASA]

Fifty years ago this month, U.S. astronaut Edward White stepped out of his spacecraft, Gemini IV, to take the first “spacewalk” by an American, an event captured in some famous photos by his crewmate, James McDivitt. The Gemini program—which explored astronaut endurance on long missions, docking and navigational techniques, and other fundamentals for long-term space travel—marked a key proving ground for the techniques and equipment used in the Apollo program, which achieved the first manned lunar landing only a few years later. And, while rocket fuel and liquid oxygen may have propelled the crews to the moon, optics formed an integral part of the great adventure.
