[Image: TUM]

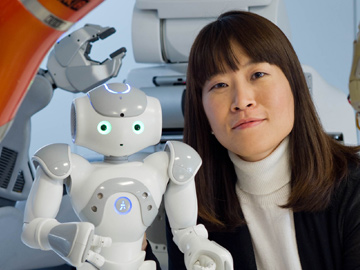

This June, at the OSA Imaging and Applied Optics Congress in Munich, Germany, Dongheui Lee of the Technical University of Munich (TUM) will give a plenary talk about “Robot Learning from Human Guidance,” and the role of so-called imitation learning in the roboticist’s toolkit. OPN caught up with Dongheui recently to learn more about the topic.

OPN: What sets imitation learning apart from other machine learning categories in robotics?

Dongheui Lee: Imitation learning is a core and fundamental part of human learning. Simply speaking, imitation learning can be understood as learning a new skill by imitating someone else's skill. For example, when we learn a new skill, we often imitate experts. Imitation learning therefore relies on an expert’s demonstrations.

In contrast to imitation learning, reinforcement learning, another machine learning category, teaches a new skill by trial and error, with the goal of maximizing the resulting reward. In reinforcement learning, expert demonstrations are not given to the system; instead, the rewards for the robot’s actions under its policy are provided as training data. When a robot takes a good action, it gets a reward. When it takes a bad action, it receives a penalty. Reinforcement learning tries to find an optimal solution by balancing exploration and exploitation.
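As a rough illustration of the reward-and-penalty loop described above (a sketch that is not from the interview), the following uses an epsilon-greedy strategy on a toy three-action problem. The function name `epsilon_greedy_bandit`, the success probabilities and the +1/-1 reward scheme are all assumptions chosen for the example.

```python
import random

def epsilon_greedy_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn action values by trial and error on a toy multi-armed bandit.

    reward_probs[a] is the (hypothetical) chance that action a is a "good" action.
    """
    rng = random.Random(seed)
    n = len(reward_probs)
    counts = [0] * n      # how often each action was tried
    values = [0.0] * n    # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                          # explore: random action
        else:
            action = max(range(n), key=lambda a: values[a])    # exploit: best estimate so far
        # Good action -> reward (+1), bad action -> penalty (-1).
        reward = 1.0 if rng.random() < reward_probs[action] else -1.0
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]
    return values, counts

values, counts = epsilon_greedy_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(values)), key=lambda a: values[a])
print("estimated values:", values)
print("best action:", best_action)
```

Here `epsilon` sets the balance: a larger value means more exploration, a smaller one more exploitation of what has already been learned.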

How does imitation learning expedite robot learning?

Reinforcement learning can suffer from poor sample complexity on challenging tasks, including high-dimensional tasks. These limitations can be reduced when the strategy is combined with imitation learning. When starting with imitation learning as an initial policy, a robot’s learning speed increases dramatically. It is like starting with a suboptimal solution and working to improve its policy further by exploration and exploitation.
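The idea of starting from an imitation-based initial policy can be sketched on a one-parameter toy task. This is only an illustration under stated assumptions: the target angle, the demonstration values and the helper names (`imitation_init`, `refine`) are hypothetical, and the refinement step is a simple accept-if-better random search standing in for a full reinforcement learning algorithm.

```python
import random

TARGET = 1.0  # hypothetical goal joint angle in radians

def reward(theta):
    """Higher is better; zero when the parameter hits the target exactly."""
    return -(theta - TARGET) ** 2

def imitation_init(demonstrations):
    """Initial policy parameter: the average of the expert demonstrations."""
    return sum(demonstrations) / len(demonstrations)

def refine(theta, trials=200, step=0.05, seed=0):
    """Trial-and-error refinement: keep a random perturbation only if it improves the reward."""
    rng = random.Random(seed)
    best = reward(theta)
    for _ in range(trials):
        candidate = theta + rng.gauss(0.0, step)
        r = reward(candidate)
        if r > best:
            theta, best = candidate, r
    return theta

demos = [0.90, 1.15, 0.85]           # noisy, suboptimal expert demonstrations (made up)
warm_start = imitation_init(demos)   # policy initialized by imitation
cold_start = -2.0                    # arbitrary initialization without demonstrations

warm_final = refine(warm_start)
cold_final = refine(cold_start)
print("warm start:", warm_start, "->", warm_final)
print("cold start:", cold_start, "->", cold_final)
```

The imitation-based start is already close to the goal, so the same exploration budget is spent on fine-tuning rather than on finding the right region in the first place.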

Imitation learning can allow robots to learn efficiently, compared with other machine-learning algorithms. Furthermore, imitation learning allows general human users, with no knowledge of robotics or computer programming, to program robots intuitively.

How does this work? What are the learning inputs?

Learning controllers in robotics can leverage different modalities and learning strategies. One is visual observation of human motions, which can be exploited in imitation learning. In this approach, 3-D motion capture systems can be used to transfer the user’s motion to a robot, which is particularly convenient in the case of humanoid robots. The overall structure consists of three components: human motion measurement, motion mapping from a human to a robot, and motion control.
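The three components named above can be sketched for a single joint as follows. This is a minimal illustration, not a description of any particular system: the hard-coded angles stand in for motion capture output, the joint limits are invented, and the controller is a plain proportional update.

```python
def measure_human_motion():
    """Stand-in for a motion capture system: human elbow angles in radians (made-up values)."""
    return [0.0, 0.6, 1.2, 2.4, 2.9]

def map_to_robot(human_angles, lower=0.0, upper=2.5):
    """Motion mapping: clamp human angles to the robot's (hypothetical) joint limits."""
    return [min(max(angle, lower), upper) for angle in human_angles]

def track(targets, gain=0.5, steps_per_target=20):
    """Motion control: a proportional controller moves the joint toward each target in turn."""
    position = 0.0
    reached = []
    for target in targets:
        for _ in range(steps_per_target):
            position += gain * (target - position)
        reached.append(position)
    return reached

human_angles = measure_human_motion()         # 1. human motion measurement
robot_targets = map_to_robot(human_angles)    # 2. motion mapping from human to robot
robot_positions = track(robot_targets)        # 3. motion control
print(robot_targets)
print(robot_positions)
```

The mapping step matters because a human pose may be outside what the robot can reach; in this sketch the last human angle exceeds the assumed upper limit and is clamped before it is sent to the controller.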

Physical interaction provides a natural interface for kinesthetic transfer of skills as well. Here, the human user can demonstrate or refine the task in the robot’s environment, while feeling its capabilities and limitations. Recent hardware and software developments toward compliant and tactile robots (including variable stiffness actuators, back-drivable motors and artificial skins) make kinesthetic teaching a promising teaching modality for the user.

Besides these learning modalities, other strategies have been utilized for teaching a robot. Teleoperation systems enable the human subject to feel the robot’s interaction with the environment, and that feeling can be exploited to learn force profiles for a desired task. Voice commands and oral feedback can also be integrated in the loop of learning control.

Finally, reinforcement learning enables a robot to autonomously discover an optimal behavior through trial-and-error interactions with its environment.

What types of optical sensor systems help enable this kind of robot learning?

Imitation learning involves a lot of human interaction and human movement. Human motions can be measured by different types of systems, such as marker-based optical motion capture, IMU (inertial measurement unit) based motion capture, markerless optical motion capture, and time-of-flight sensors.

Numerous techniques exist for human motion measurement, and many commercial motion capture systems are available on the market, such as Vicon. Different systems have different strengths and weaknesses in terms of accuracy, mobility (spatial area for motion capture), and ease of use. For example, with some of these approaches, you actually need to attach markers or IMU sensors to the body.

In robotics, RGB-D cameras have been widely used since Microsoft released its Kinect sensors. However, these aren’t robust in outdoor environments. For drones, optical sensors that are lightweight and robust outdoors are desirable.

Recently, makers of humanoid robots have used fiber optic gyroscope sensors to estimate the precise state of humanoid robots without a sensing delay. But those can be very expensive.

What are the limits of imitation learning in robotics? Are there certain complicated tasks or body movements that are difficult to teach using this model?

Observing human movements and copying them doesn’t always ensure that the robot will achieve the task at hand. This is the same with humans, of course: just watching another’s actions does not guarantee that your own motor skills will improve. Watching someone’s skillful driving does not immediately give us the skill to drive. When the tasks are complex and highly dynamic, one has to practice oneself until the motor skills are fully embodied.

What are you working on right now in your efforts at TUM and the German Aerospace Center (DLR)?

At DLR, my team members and I are currently working on future applications for factory and surgical robots. The common goal is the development of assistive robots that can coexist and interact with humans. For that, a robot needs to be able to estimate humans’ activities and intentions, and decide how to behave accordingly. This requires symbolic reasoning, trajectory planning and physical interaction control.

Another recent project with my TUM team members investigates the effect of light-touch haptic interactions on human balancing. It is known that light touch in a static environment helps a human subject to stabilize his or her balance. Interpersonal light touch between two human subjects has a similar effect. We are now investigating a robotic solution for light-touch support during locomotion in balance-impaired humans.