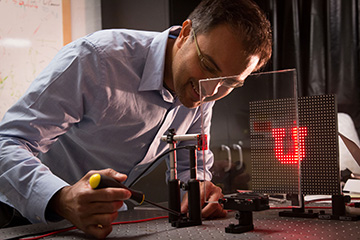

Rajesh Menon, University of Utah, with setup that uses a pane of glass and a CMOS sensor to create a lensless see-through camera. [Image: Dan Hixson/University of Utah College of Engineering]

A research team at the University of Utah, USA, has demonstrated a prototype of a lensless, see-through camera that uses computational optics to reconstruct images passing through an ordinary window pane (Opt. Express, doi: 10.1364/OE.26.022826). While the proof-of-concept system’s image resolution is on the primitive side, the researchers believe that adding more powerful sensors to the mix could boost quality significantly. And they say that the approach could eventually find use in augmented reality (AR), autonomous vehicles and other “applications where form factor is important.”

Images without lenses

The notion of cameras that reconstruct images computationally, without the use of bulk lenses, is not new. Previous demonstrations, however, have often involved complications such as a requirement for coherent illumination, coded apertures in front of the image sensor, or microlens arrays. In such cases, the nominally lensless imaging elements can obscure at least part of the observer’s field of view. And other approaches developed for AR such as wedge optics and luminescent concentrators, while ostensibly transparent, can still limit light transmission through the imaging system in various ways.

OSA Fellow Rajesh Menon and colleague Ganghun Kim wanted to see if they could fashion a truly see-through camera that did not require a lens. To do so, they built on work published last year that involved computationally reconstructing an image from a bare CMOS image sensor (Appl. Opt., doi: 10.1364/AO.56.006450).

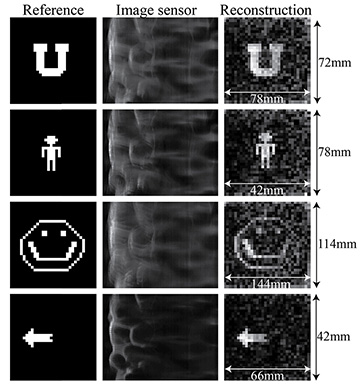

In that work, light scattered by the glass cover on the sensor produces a blobby, seemingly formless pattern on the sensor itself. That pattern, it turns out, gives the space-variant point-spread function (PSF) of the scattered light. An image of the original scene can then be “backed out” of the PSF by computationally solving the mathematical inverse problem representing the light-scattering process.

Sandpaper and reflective tape

To put such an approach to the test in a device that could be more relevant to AR applications, Kim and Menon began with a 200×225-mm sheet of plexiglass acrylic, with the edges blowtorched to make them smooth. They then roughed up a small patch of one of the window edges with sandpaper to provide a zone of scattering, and attached a conventional CMOS image sensor to the roughed-up patch. The rest of the window edge was covered with aluminum reflective tape.

When light from an object passes through this slightly souped-up window, a tiny fraction of the light falls on the roughed-up patch on the edge; that light scatters into the CMOS sensor and creates an image of the space-variant PSF. To computationally pull the original image out of that blobby PSF image, Kim and Menon began by measuring the PSF images created from point sources at different locations, to create a calibration matrix for the system. They could then, in principle, use that matrix to recover the image of an arbitrary object from the PSF image using a standard linear inverse solving algorithm.
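For readers who want a feel for the calibrate-then-invert idea, the following is a minimal sketch in Python, not the researchers' actual code. It assumes each calibration measurement is flattened into one column of a matrix `A`, so that a sensor reading `b` for a scene `x` is approximately `A @ x`. The Tikhonov-regularized least-squares solver, the synthetic random PSFs, the array sizes and the parameter `lam` are all illustrative assumptions; the article says only that a standard linear inverse solver was used.

```python
import numpy as np

def build_calibration_matrix(psf_images):
    """Stack one flattened sensor image per point-source position as columns."""
    return np.stack([img.ravel() for img in psf_images], axis=1)

def reconstruct(A, sensor_image, lam=1e-6):
    """Regularized least squares: minimize ||A x - b||^2 + lam * ||x||^2.

    The regularization weight `lam` is a hypothetical tuning parameter.
    """
    b = sensor_image.ravel()
    n = A.shape[1]
    return np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ b)

# Synthetic stand-in for the calibration step: one random "blobby" sensor
# image for each of the 8 x 8 point-source positions (assumed sizes).
rng = np.random.default_rng(0)
scene_shape = (8, 8)
sensor_shape = (32, 32)
psf_images = [rng.random(sensor_shape)
              for _ in range(scene_shape[0] * scene_shape[1])]
A = build_calibration_matrix(psf_images)

# A simple test scene: a short vertical bar of lit pixels.
scene = np.zeros(scene_shape)
scene[2:6, 3] = 1.0

# Forward model: the sensor sees a weighted sum of the calibration images.
sensor_image = (A @ scene.ravel()).reshape(sensor_shape)

# Inverse step: recover the scene from the sensor image alone.
recovered = reconstruct(A, sensor_image).reshape(scene_shape)
```

In this noise-free toy case the recovered scene matches the original almost exactly. With real measurements, noise and an ill-conditioned calibration matrix would make the choice of regularization matter far more.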

The original display (left column) is scattered to create a blobby point-spread function (center column) picked up by a CMOS image sensor embedded in the edge of the window. The original image is then computationally reconstructed from the point-spread function (right column). [Image: G. Kim and R. Menon, Opt. Express, doi: 10.1364/OE.26.022826]

From window to security camera?

Using this setup, Kim and Menon were able to reconstruct low-resolution images from an LED light board and a smartphone LCD display, and to demonstrate color and even video imaging. Even at its present resolution, the researchers believe that the system could be sufficient for some applications, such as obstacle-avoidance sensors on autonomous vehicles.

Longer term, Kim and Menon think that using window glass as a camera could find use in a wide variety of settings. Security cameras, for example, might be built directly and unobtrusively into a home’s windows during construction, and AR glasses and biometric scanners could be made sleeker and more compact by nestling the imaging hardware in the edges of the lens. Such applications will likely require higher-resolution sensors than the ones used in this proof of concept.

Still, Menon, in a press release, suggests that the work bears out his “philosophical point”—that designing cameras optimized for machines rather than humans can create new opportunities. “It’s not a one-size-fits-all solution,” he says. “But it opens up an interesting way to think about imaging systems.”