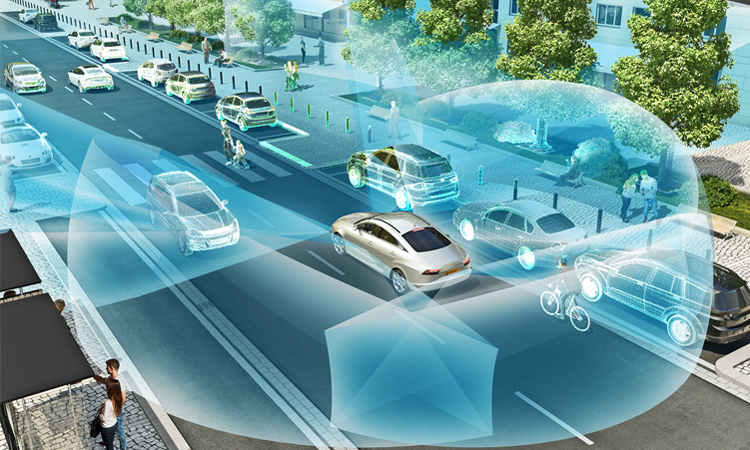

Emerging autonomous vehicles will combine lidar photon returns with data from other sensors to build a picture of their surroundings. [Continental Automotive]

Autonomous cars are hot—a multi-billion-dollar business opportunity that could transform mobility. Beyond everyday use, self-driving cars could expand transportation options for the elderly and disabled and ease business travel by guiding drivers in unfamiliar locales. Perhaps most important, their use could reduce the deadly toll of traffic accidents by removing a primary cause: the proverbial “nut behind the wheel” who is prone to speeding, drinking or texting while driving.

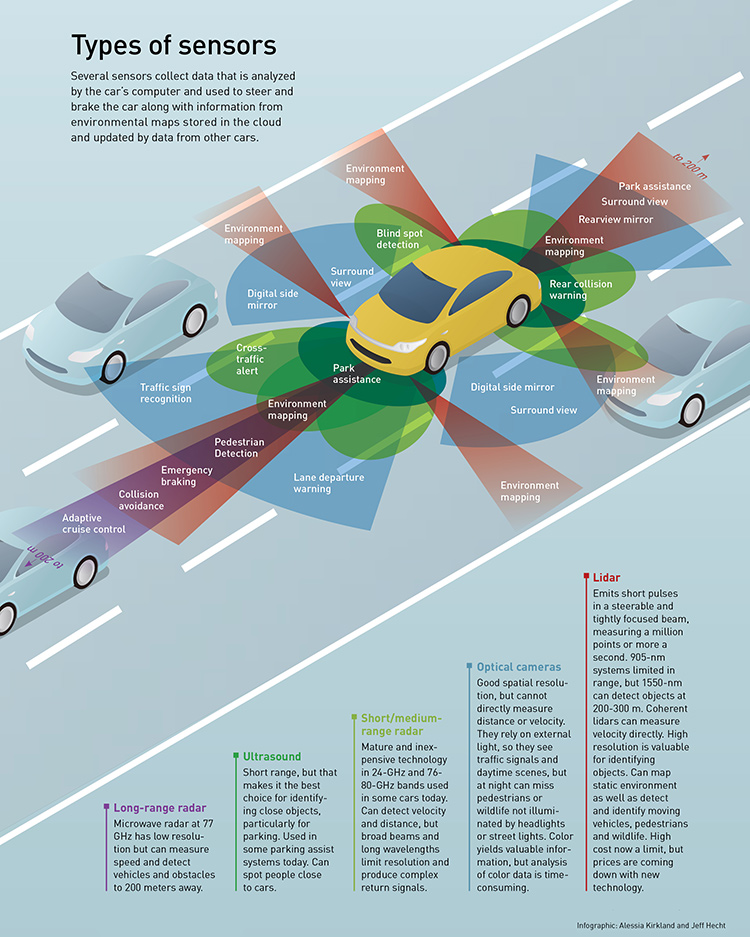

Achieving automotive autonomy requires artificial intelligence to process and integrate data from a suite of sensors including cameras, microwave radar, ultrasonic sensors and laser radar, better known as lidar. Each sensor in the suite has its strengths and weaknesses. Ultrasonics are good at sensing nearby objects for parking, but too short-ranged for driving. Cameras show the local environment, but don’t measure distance. Microwave radar measures distance and speed, but has limited resolution. Lidar pinpoints distance and builds up a point cloud of the local environment, but at present it has a limited range and a steep price tag. Extending lidar’s range, and reducing its costs, thus forms a key challenge for making autonomous driving an everyday reality.

Long-standing vision

The idea of self-driving cars is far from new. The General Motors Futurama exhibit at the 1939 New York World’s Fair envisioned radio-controlled electric cars driving themselves on city streets. In 1958 GM and the Radio Corporation of America tested radio-controlled cars on 400 feet of Nebraska highway with detector circuits embedded in the pavement. But the available technology was not up to the task.

The second edition of the autonomous vehicle “Grand Challenge” of the U.S. Defense Advanced Research Projects Agency (DARPA), in 2005, and the Urban Challenge two years later, revived interest by making the technology for self-driving cars seem within reach. That stimulated technology companies to jump in—notably Google, which launched its program in 2009. Major programs have followed at giant, traditional car makers including General Motors, Ford, and Toyota.

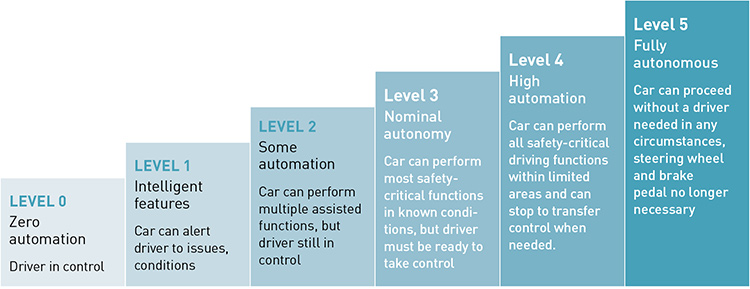

But what does “self-driving” really mean? The Society of Automotive Engineers (SAE) defines five levels of automation beyond fully manual control (Level 0). They range from Level 1 (“feet off”), typified by adaptive cruise control; through Level 2 (“hands off”), typified by systems such as Tesla’s “Autopilot,” which assume the driver is poised to take immediate control; and Level 3 (“eyes off”), in which the driver must be ready to take over when the system asks; to Level 4 (“mind off”), which can bring the car to a safe stop on its own when needed; and finally completely automated Level 5 vehicles requiring no driver at all. Level 4 and Level 5 constitute the holy grail for autonomous-vehicle development, and also the most difficult targets to reach.

Five levels of autonomy: The Society of Automotive Engineers categorizes automated vehicles by increasing levels of autonomy.

A suite of sensors

The difference between the SAE’s five levels is more than academic—a fact that came into tragic focus on 7 May 2016, when the driver of a Tesla Model S equipped with the company’s Autopilot and cruise control, traveling at 74 miles per hour, fatally smashed into a tractor-trailer on a divided highway in Florida. Neither the driver nor the car spotted the truck turning left across their lane in time to hit the brakes.

In theory, the Tesla’s forward-looking camera and microwave radar both should have spotted the truck when it was 200 meters away, far enough for Autopilot to brake safely. The radar may have missed it because the trailer was high enough above the ground to be mistaken for an overhead traffic sign, an object of no consequence. The monochrome camera, used to speed image processing, may not have discerned the difference between a plain white truck and a bright blue sky.

Top: Luminar co-founder Austin Russell in car with lidar images. Bottom: Luminar lidar records a point cloud image of its surroundings; colors convey information such as distance to the objects observed. [Images courtesy of Luminar]

The National Transportation Safety Board concluded that—despite its potentially misleading brand name—Autopilot “was not designed to, and did not, identify the truck crossing the car’s path.” It was programmed to watch for vehicles moving in the same direction, not crossing the car’s path. It would only stop for stationary objects in the road if two sensors triggered warnings, and that didn’t happen. (Tesla, for its part, says it views Autopilot as a driver-assistance feature for a high-end car, not a truly autonomous driving system.)

A lidar with 200-meter range could have spotted the truck in time for a suitably programmed car to apply the brakes, says Jason Eichenholz, co-founder and chief technology officer of Luminar Technologies. At the time, car lidar ranges were limited to a few tens of meters, but in 2017 Luminar announced a 200-meter model, and this year it plans to deliver its first production run to automakers. With the notable exception of Tesla, most companies developing self-driving vehicles are looking seriously at lidar.

In fact, to achieve Level 4 or 5 autonomy, most vehicle developers envision a suite of sensors, including not just lidar but also microwave sensors, optical cameras and even ultrasound. (See infographic below.) The car’s control system would combine and analyze inputs from multiple sensors, a process called “sensor fusion,” and use the result to make decisions, steer the car, and issue warnings or apply the brakes when necessary.
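As a toy illustration of the idea behind sensor fusion, the sketch below combines independent range estimates of the same object from several sensors by inverse-variance weighting, a textbook technique that is the one-step, static special case of a Kalman filter. The sensor labels and noise figures are hypothetical assumptions, not values from any production system.

```python
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str      # hypothetical sensor label
    range_m: float   # measured distance to the object, meters
    sigma_m: float   # assumed 1-sigma measurement noise, meters


def fuse_ranges(readings: list[RangeReading]) -> tuple[float, float]:
    """Inverse-variance weighted mean of independent range readings.

    Returns (fused_range_m, fused_sigma_m). Noisier sensors get less weight,
    and the fused uncertainty is smaller than that of any single sensor.
    """
    if not readings:
        raise ValueError("need at least one reading")
    weights = [1.0 / r.sigma_m ** 2 for r in readings]
    total = sum(weights)
    fused = sum(w * r.range_m for w, r in zip(weights, readings)) / total
    return fused, (1.0 / total) ** 0.5


if __name__ == "__main__":
    readings = [
        RangeReading("lidar", 50.2, 0.05),  # assumed: precise ranging
        RangeReading("radar", 50.6, 0.5),   # assumed: coarser ranging
    ]
    r, s = fuse_ranges(readings)
    print(f"fused range {r:.2f} m +/- {s:.3f} m")
```

The weighting lets the more precise sensor dominate without discarding the other. Real fusion stacks do far more, such as tracking and classifying objects over time, but the principle of weighting each input by its reliability carries over.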

Reinventing lidar for self-driving cars

Laser ranging has been around since the early 1960s, when MIT’s Lincoln Laboratory measured the distance to the moon by firing 50-joule pulses from a ruby laser, with a return signal of only 12 photons. Most lidars still depend on the same basic time-of-flight principle. But the technology has come a long way.
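The time-of-flight principle reduces to one line of arithmetic: distance is half the round-trip time multiplied by the speed of light. This minimal sketch uses the vacuum speed of light and ignores the slight slowing of light in air; the 2.56-second Earth-moon echo in the usage lines is an approximate figure used only for illustration.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def tof_distance_m(round_trip_s: float) -> float:
    """One-way distance from a round-trip time of flight: d = c * t / 2."""
    return C * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Round-trip time for light to reach a target and return: t = 2d / c."""
    return 2.0 * distance_m / C


if __name__ == "__main__":
    # Assumed round-trip time of about 2.56 s for a lunar echo.
    print(f"moon: {tof_distance_m(2.56) / 1000:,.0f} km")
    # A car lidar target 200 m away.
    print(f"200 m target: {round_trip_time_s(200.0) * 1e6:.2f} microseconds")
```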

The 2005 DARPA challenge inspired David Hall, founder of Velodyne Acoustics, to build a spinning lidar to mount on top of a vehicle he entered in a desert race. His vehicle didn’t finish the race, but he refined his spinning lidar design to include 64 lasers and sensors that recorded a cloud of points showing objects that reflected light in the surrounding area. His lidar design soon became part of the company’s business. It proved its worth in DARPA’s 2007 Urban Challenge, when five of the six vehicles that finished had Velodyne lidars spinning on top. The spinning lidars were expensive—about $60,000 each when Google started buying them for its own self-driving car experiments. But for years they were the best available. An enhanced version that scans more than two million points per second is still on the market, and Velodyne now offers smaller and less expensive versions with 32 and 16 lasers.

The Velodyne lidar was a breakthrough, but its performance was limited by its wavelength. Lidar makers use 905-nm diode lasers because they are inexpensive and can be used with silicon detectors. However, the wavelength can penetrate the eye, so pulse power must be limited to avoid risking retinal damage. This is not a big problem for stationary lidars, because averaging returns from a series of pulses can extend their range; a lidar firing 10,000 pulses can have a 300-meter range. But returns from moving lidars can’t be averaged because they fire only one pulse at each point. That limits their range to 100 meters for highly reflective objects and only 30 to 40 meters for dark objects with 10 percent reflectivity.
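Why dark objects cut range so sharply can be seen from a simplified lidar equation. Assuming an extended, diffusely reflecting target that fills the beam and no atmospheric loss, received power scales as ρ/R², where ρ is reflectivity and R is range, so at a fixed detection threshold the maximum range scales as the square root of ρ. The 90 percent reflectivity assumed below for a “highly reflective” object is an illustrative assumption, not a figure from the article.

```python
import math


def scaled_max_range_m(ref_range_m: float, ref_reflectivity: float,
                       reflectivity: float) -> float:
    """Scale a known maximum range to a different target reflectivity.

    Idealized model (assumption): received power ~ reflectivity / range**2,
    so at a fixed detection threshold max range ~ sqrt(reflectivity).
    Ignores atmospheric loss, beam size, and detector effects.
    """
    if not (0 < ref_reflectivity <= 1 and 0 < reflectivity <= 1):
        raise ValueError("reflectivities must be in (0, 1]")
    return ref_range_m * math.sqrt(reflectivity / ref_reflectivity)


if __name__ == "__main__":
    # Assumed: a "highly reflective" object is ~90% reflective, seen at 100 m.
    dark = scaled_max_range_m(100.0, 0.90, 0.10)
    print(f"10%-reflective object: about {dark:.0f} m")
```

Under these assumptions the result is about 33 meters, consistent with the 30-to-40-meter figure for dark objects, although real performance also depends on factors this model leaves out.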

Google worked around the lidar’s short range by limiting its self-driving tests to 25 miles per hour, slow enough for its cars to stop within 30 meters. The cars used lidar data on fixed and moving objects for navigation and collision avoidance. Data on stationary objects also went into Google’s mapping system, including data on where to look for traffic signals and signs.
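The link between speed and required sensing range can be sketched with the standard stopping-distance estimate: the distance covered during a reaction delay plus the braking distance v²/(2a). The reaction time and deceleration below are illustrative assumptions, not Google’s or any carmaker’s figures.

```python
MPH_TO_MPS = 0.44704  # 1 mile per hour in meters per second (exact)


def stopping_distance_m(speed_mph: float, reaction_s: float = 1.0,
                        decel_mps2: float = 5.0) -> float:
    """Reaction distance plus braking distance: d = v*t + v**2 / (2*a).

    reaction_s and decel_mps2 are assumed defaults (about 1 s of total
    latency and roughly half a g of braking), chosen only for illustration.
    """
    v = speed_mph * MPH_TO_MPS
    return v * reaction_s + v ** 2 / (2.0 * decel_mps2)


if __name__ == "__main__":
    for mph in (25, 74):
        print(f"{mph} mph: about {stopping_distance_m(mph):.0f} m to stop")
```

With these assumptions, 25 miles per hour needs roughly 24 meters, inside a 30-meter envelope, while 74 miles per hour (the speed in the Florida crash) needs roughly 140 meters, which suggests why a 200-meter range matters at highway speeds.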

Bringing down costs

For auto makers looking at production and margins, however, the prices of spinning mechanical lidars remain too high. So lidar developers are introducing a new generation of more cost-effective lidars for automotive use. To avoid the high cost of spinning mirrors, the new lidars scan back and forth across fields of view typically limited to 120 degrees. Building a 3-D point cloud of the full surroundings therefore requires multiple stationary lidars pointing in different directions from the front, sides and back of the vehicle. Many such systems measure distance by time of flight, but coherent lidars are also being developed.
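The need for several fixed units follows from simple geometry. A minimal sketch, assuming identical sensors spaced evenly around the car and a chosen overlap between neighboring fields of view (the overlap value is a hypothetical choice for illustration):

```python
import math


def sensors_for_full_circle(fov_deg: float, overlap_deg: float = 0.0) -> int:
    """Minimum number of identical sensors to cover 360 degrees horizontally.

    Each sensor contributes its field of view minus the overlap it shares
    with a neighbor. Ignores blind spots near the car caused by mounting.
    """
    effective = fov_deg - overlap_deg
    if effective <= 0:
        raise ValueError("overlap must be smaller than the field of view")
    return math.ceil(360.0 / effective)


if __name__ == "__main__":
    print(sensors_for_full_circle(120.0))        # no overlap
    print(sensors_for_full_circle(120.0, 15.0))  # assumed 15-degree overlap
```

Three 120-degree units just tile the circle; any overlap, useful for stitching point clouds together, pushes the count to four or more.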

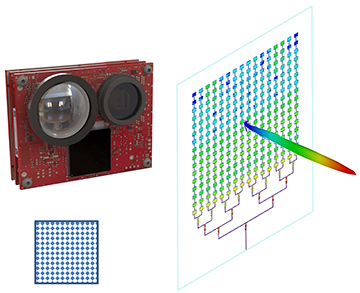

(Top) Quanergy S3 solid-state lidar, inside view. (Lower left) Single-photon avalanche diode array, chip-to-chip attached to readout IC. (Lower right) Transmitter optical phased array photonic IC with far-field radiation pattern. [Courtesy of Quanergy]

In January 2016, Quanergy (Sunnyvale, Calif., USA) announced plans to mass-produce a 905-nm solid-state lidar with a range of 150 meters at 8 percent reflectivity. Based on CMOS silicon, the lidar uses an optical phased array to scan half a million points a second with a spot size of 3.5 centimeters at 100 meters, says CEO Louay Eldada. Mass production was scheduled to start in November 2017. Single-unit samples are expected to sell for less than US$1,000, dropping to US$250 in large volumes.
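The quoted spot size implies a very small beam divergence. Under the small-angle approximation, and treating the 3.5-centimeter spot as entirely due to divergence (an assumption that ignores the beam’s width at the exit aperture), the full-angle divergence is simply spot size divided by distance:

```python
import math


def full_divergence_mrad(spot_m: float, distance_m: float) -> float:
    """Small-angle full divergence, theta ~ spot / distance, in milliradians.

    Assumes the beam starts from a point, so the whole spot width is divergence.
    """
    return spot_m / distance_m * 1e3


def spot_at_m(divergence_mrad: float, distance_m: float) -> float:
    """Spot width in meters at a given distance for a given full divergence."""
    return divergence_mrad * 1e-3 * distance_m


if __name__ == "__main__":
    theta = full_divergence_mrad(0.035, 100.0)
    print(f"{theta:.2f} mrad = {math.degrees(theta / 1e3):.3f} degrees")
    print(f"spot at 200 m: {spot_at_m(theta, 200.0) * 100:.1f} cm")
```

That works out to about 0.35 milliradian, or roughly 0.02 degree, and under the same assumption the spot would grow to about 7 centimeters at 200 meters.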

In April 2017, Velodyne Lidar announced plans for a compact 905-nm solid-state lidar that the company says will scan 120 degrees horizontally and 35 degrees vertically and have a 200-meter range, even for low reflectivity. The 12.5×5×5.5-cm lidar modules are to be embedded in the front, back and sides or corners of the car. Velodyne says it will use “a frictionless mechanism designed to have automotive-grade mechanical reliability.” The price is expected to be hundreds of U.S. dollars.

Longer wavelength, longer range

Luminar Technologies started with ambitious goals and spent years in stealth mode, learning “two thousand ways not to make a lidar,” its co-founder Eichenholz says. “We want to have higher spatial resolution, smaller angles between beams, tighter beams, and enough energy per pulse to confidently see a 10 percent reflective object at 200 meters.”

The company started at 905 nm and quickly realized that, because of the eye-safety issue, that wavelength didn’t allow a high enough photon budget to reach the desired range. Luminar’s solution was to shift to the 1550-nm band used for telecommunications, which is strongly absorbed within the eye so that it doesn’t reach the retina. That natural safety margin allows the higher pulse power needed for a 200-meter range for dark objects—far enough for cars going at highway speed to stop safely if the lidar spots a hazard.

“The speed of light is too slow” for simple time-of-flight lidar, says Eichenholz. He wanted to scan more than a million points per second out to 200 meters, which allows less than a microsecond per point, but light takes 1.3 microseconds to make the round trip to a target 200 meters away. To overcome that time lag and get the desired point field, Luminar’s system has two beams in flight at once, with their interaction examined at the detector.
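Eichenholz’s point can be checked with a few lines of arithmetic. If only one pulse may be in flight at a time, the point rate cannot exceed the reciprocal of the round-trip time to the maximum range, so higher rates require more than one pulse in flight. This sketch computes that ceiling and, as a simplifying assumption, ignores any processing dead time between pulses.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def max_points_per_s_single_pulse(max_range_m: float) -> float:
    """Point-rate ceiling if each pulse must return before the next is fired."""
    round_trip_s = 2.0 * max_range_m / C
    return 1.0 / round_trip_s


def pulses_in_flight_needed(points_per_s: float, max_range_m: float) -> int:
    """Minimum number of simultaneous pulses needed to reach a point rate."""
    return math.ceil(points_per_s / max_points_per_s_single_pulse(max_range_m))


if __name__ == "__main__":
    ceiling = max_points_per_s_single_pulse(200.0)
    print(f"single-pulse ceiling at 200 m: {ceiling:,.0f} points/s")
    print(pulses_in_flight_needed(1_000_000, 200.0))
```

At 200 meters the single-pulse ceiling is about 750,000 points per second, so a million points per second needs at least two pulses in flight, matching the two-beam approach described above.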

For scanning the scene, Luminar turned to galvanometers, whose mirrors, instead of spinning, swing back and forth to scan up to 120 degrees horizontally and 30 degrees vertically. Eichenholz says that with galvos their receivers “look at the world essentially through a soda straw,” with a narrow field of view. That cuts down interference from sunlight, lidars in other cars, headlights and other sources. The receiver might pick up one glint, but the system discards that pixel and moves on to the next. The scanner also can zoom in on small areas to see fine details.
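How finely a scanner samples its field of view depends on how its point budget is spread across each frame. The sketch below assumes points are distributed uniformly over a rectangular field; the rate of 1 million points per second echoes Eichenholz’s goal, while the 10 Hz frame rate is a hypothetical value chosen for illustration.

```python
import math


def mean_point_spacing_deg(h_fov_deg: float, v_fov_deg: float,
                           points_per_s: float, frame_rate_hz: float) -> float:
    """Average angular spacing between points for a uniform raster scan.

    Each frame spreads points_per_s / frame_rate_hz points over the field,
    so each point covers (h_fov * v_fov) / points_per_frame square degrees.
    """
    points_per_frame = points_per_s / frame_rate_hz
    return math.sqrt(h_fov_deg * v_fov_deg / points_per_frame)


if __name__ == "__main__":
    spacing = mean_point_spacing_deg(120.0, 30.0, 1_000_000, 10.0)
    print(f"about {spacing:.2f} degrees between points")
```

Under these assumptions the spacing is about 0.19 degree, or roughly 0.66 meter between points at 200 meters. Concentrating the same points on a smaller region shrinks that spacing, which is the benefit of zooming in on areas of interest.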

Luminar is gearing up its Orlando factory to begin production of its first 10,000 lidars this year.

FM continuous-wave lidar

Strobe Inc., a start-up in Pasadena, Calif., USA, that was bought in October by General Motors, has chosen the same wavelength, but a different approach. Instead of firing laser pulses, Strobe’s lidar-on-a-chip applies sawtoothed frequency chirps to the output of a continuous-wave laser. When the reflected chirped signal returns to the lidar, it mixes with an outgoing chirped beam in a photodiode to produce a beat frequency, says Strobe founder Lute Maleki. The frequency modulation and mixing with a local oscillator make the system a coherent lidar, which is largely immune to interference.
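For a stationary target, the arithmetic behind frequency-modulated continuous-wave (FMCW) ranging is compact. If the laser frequency ramps linearly with slope S (chirp bandwidth B swept in time T), the echo returns delayed by τ = 2R/c, so it differs from the outgoing light by a beat frequency f_b = Sτ, giving R = c·f_b/(2S). The chirp parameters below are hypothetical illustrations, not Strobe’s design values.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def beat_frequency_hz(range_m: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Beat frequency for a stationary target and linear chirp: f_b = S * 2R / c."""
    slope = bandwidth_hz / chirp_s  # chirp slope S, in Hz per second
    return slope * 2.0 * range_m / C


def range_from_beat_m(beat_hz: float, bandwidth_hz: float, chirp_s: float) -> float:
    """Invert the relation above: R = c * f_b / (2 * S)."""
    slope = bandwidth_hz / chirp_s
    return C * beat_hz / (2.0 * slope)


if __name__ == "__main__":
    B, T = 1e9, 10e-6  # assumed: 1 GHz chirp swept in 10 microseconds
    fb = beat_frequency_hz(200.0, B, T)
    print(f"beat frequency at 200 m: {fb / 1e6:.1f} MHz")
    print(f"recovered range: {range_from_beat_m(fb, B, T):.1f} m")
```

The chirp in this example lasts far longer than the 1.3-microsecond round trip to 200 meters, so the echo and the outgoing light overlap long enough to measure their beat.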

For stationary targets, the beat frequency gives the range. For moving targets, the mixed signals give two frequencies that directly yield the object’s velocity as well as distance. Time-of-flight lidars must calculate velocity from a timed series of distance measurements. “Getting speed directly helps in lots of ways, cutting the processing time and giving you more information,” says Maleki.
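One common textbook way to obtain both quantities, shown here as an assumption rather than a description of Strobe’s design (the article describes sawtooth chirps), is a triangular chirp. The up-ramp and down-ramp each yield a beat frequency, and the Doppler shift f_D = 2v/λ pushes them in opposite directions: for an approaching target, f_up = f_R − f_D and f_down = f_R + f_D. Their average gives range and their difference gives radial velocity.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s


def triangular_fmcw(f_up_hz: float, f_down_hz: float, slope_hz_per_s: float,
                    wavelength_m: float) -> tuple[float, float]:
    """Range and radial velocity from up- and down-chirp beat frequencies.

    Convention (assumed): positive velocity means the target is approaching,
    so f_up = f_R - f_D and f_down = f_R + f_D, with f_R = 2*S*R/c and
    f_D = 2*v/wavelength. Assumes f_R > f_D so neither beat note folds past zero.
    """
    f_range = (f_up_hz + f_down_hz) / 2.0    # range-only beat component
    f_doppler = (f_down_hz - f_up_hz) / 2.0  # Doppler shift
    range_m = C * f_range / (2.0 * slope_hz_per_s)
    velocity_mps = wavelength_m * f_doppler / 2.0
    return range_m, velocity_mps


if __name__ == "__main__":
    S = 1e14        # assumed chirp slope: 1 GHz per 10 microseconds
    lam = 1550e-9   # 1550-nm laser wavelength
    # Synthesize beat notes for a target 150 m away closing at 30 m/s.
    f_r = 2 * S * 150.0 / C
    f_d = 2 * 30.0 / lam
    r, v = triangular_fmcw(f_r - f_d, f_r + f_d, S, lam)
    print(f"range {r:.1f} m, closing speed {v:.1f} m/s")
```

A single pair of beat notes yields velocity directly, whereas a time-of-flight lidar needs at least two range measurements separated in time to estimate the same quantity.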

Coherent lidar requires an extremely low-noise laser and an extremely linear chirp, both of which normally require large systems. What makes Strobe’s system possible is a self-injection-locked laser which combines a diode laser with a millimeter-scale whispering-gallery-mode resonator to give very low noise and very linear chirp. Developed by OEWaves, another company founded by Maleki, the system can be fabricated on a semiconductor chip. Using a 1550-nm laser and a PIN photodiode, it can detect 10-percent-reflective objects at 200 meters, and 90-percent reflectors out to 300 meters. Its high angular resolution lets it spot small objects like motorcycles out to that range. Scanning is done by MEMS mirrors, which are amenable to mass production at low cost.

Those features helped sell GM on the company. The Strobe lidar tolerates interference from sunlight that can blind cameras and humans, such as a low sun reflecting off pavement, and can spot a person dressed in black walking at night on black pavement, wrote Kyle Vogt, CEO of GM’s Cruise division. “But perhaps more importantly, by collapsing the entire sensor down to a single chip, we’ll reduce the cost of each LIDAR on our self-driving cars by 99 percent.”

Coming to a showroom near you?

Timetables for the introduction of autonomous cars vary widely. The leader may be Fisker, which plans to show its all-electric EMotion equipped with five Quanergy lidar modules at the Consumer Electronics Show this month, with production of the $129,900 cars to begin in 2019. Ford has announced plans to offer “fully autonomous” cars in 2021, their target market being fleets of ride-sharing cars that spend 18 to 20 hours a day on the road. Eric Balles, the director of energy and transport at Draper Lab, says that the “value proposition” for such fleets differs greatly from that for personal cars used only an hour or so a day.

How autonomous we actually want our cars to be is also uncertain. Level 2 (“hands off”) and Level 3 (“eyes off”) seem easier to achieve technologically, but how to hand control back from machine to human when required remains an open question. Levels 4 and 5 would require much better sensing and more artificial intelligence to make vital decisions, but they could offer major mobility benefits for an aging society. [Image courtesy of Fisker]

Expanding the possibilities

Other developers of chip-based lidars are turning to solid-state scanning with optical phased arrays. The Charles Stark Draper Laboratory, an independent nonprofit based in Cambridge, Mass., USA, that has worked on sensor fusion and autonomous vehicles, is developing a chip lidar that combines optical waveguides and MEMS switches. An array of MEMS switches couples light from the waveguides out into space. The individually addressable MEMS switches move only a fraction of a millimeter and they don’t reflect light.

Draper is working on both short-range (905-nm) and long-range (1550-nm) versions of its technology. The short-range version might scan 170 by 60 degrees and be spaced around the car to cover the nearby environment; the long-range version might scan only 50 by 20 degrees, covering the road and adjoining areas to 200 meters ahead to allow driving at highway speeds. Because each switch is addressable, the lidars could shift to higher frame rates over areas of interest, such as signs of motion along the roadside ahead. The lidars would work with each other and with other sensors to monitor the whole area.

Much of this work remains at the lab scale. Christopher Poulton and colleagues at MIT, for instance, recently reported the first demonstration of a frequency-modulated coherent lidar on an integrated chip with phased-array scanning, with a range of only two meters. Yet the work points toward the feasibility of the large-scale integration that matters to an auto industry focused on reducing the cost of mass-produced parts.

A suite future

The ultimate goal is to replace the early spinning car-top lidars with compact but powerful integrated lidars mass-producible for under US$100. These lidars would form part of a sensor suite that interfaces with a powerful computerized navigation and control system—because, strong as lidar looks, no single technology can do all the sensing needed.

“No matter how good my lidar is, I can’t tell the color of a traffic light,” says Eichenholz. So color cameras will play a role. “Microwaves work much better than lidar in bad weather,” adds Maleki, so microwave radars will be needed, too. Lidars can’t provide the high resolution at close range needed for robotic cars to park themselves in close confines; that’s a job for ultrasonic sensors. And artificial-intelligence software in the car will need to pull all of the input from these sensors together to create a single robotic sensory system—and a whole greater than the sum of its parts.

Those changes will take time. For now, automotive lidar, like the whole field of autonomous vehicles, remains in flux. “Many people are jumping into the field and throwing big money into it, and there is a rush of new vehicle lidar companies,” says Dennis Killinger, a pioneer in lidar at the University of South Florida. “But major technology changes are still being made.”

Editor's note: This article was updated on 30 December 2017 to correct a misstatement on Luminar's planned production of lidars during 2018.

Jeff Hecht is an OSA Fellow and freelance writer who covers science and technology.