
The Advanced Light Source (ALS) building at the Lawrence Berkeley National Laboratory, Calif., USA. [Image: Roy Kaltschmidt/Photo courtesy of Lawrence Berkeley National Laboratory]

Researchers at the Lawrence Berkeley National Laboratory (LBNL) and the University of California, Berkeley, USA, have shown how to use machine learning and neural-net computing to sharpen and stabilize the X-ray photon beams from state-of-the-art synchrotron light sources (Phys. Rev. Lett., doi: 10.1103/PhysRevLett.123.194801). The technique, which reportedly can reduce beam-size fluctuations to the sub-micron level, should prove valuable as upgrades to current synchrotrons, including LBNL’s own Advanced Light Source (ALS), push both synchrotron capabilities and the demands on beam-size stability to new levels.

Wigglers, undulators and stability

Modern, or third-generation, synchrotrons work by circulating electrons at relativistic speeds in huge storage rings. Along the ring, the high-energy electron beam passes through multiple “insertion devices,” known as “wigglers” and “undulators,” whose alternating magnetic fields force the electrons to oscillate and emit brilliant X-ray synchrotron radiation into beamlines for specific experiments. The insertion devices can even be tuned, for instance by changing the gap between their magnet arrays, to refine the characteristics of the resulting photon beam for a particular problem.
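
For undulators, that tuning follows the textbook on-axis resonance condition `lambda_1 = lambda_u / (2 gamma^2) * (1 + K^2 / 2)`, where the deflection parameter `K = e B0 lambda_u / (2 pi m_e c)` grows with the peak field `B0`. The short sketch below assumes a hypothetical undulator with a 5 cm period and peak fields of 0.5 T and 0.3 T (as if its gap were opened), together with a 1.9 GeV electron beam, the ALS’s nominal operating energy. The device values are illustrative assumptions, not figures from the article. Wigglers run at large K and emit a broad spectrum, so the formula applies to undulators.

```python
import math

# Physical constants (CODATA 2018 values, SI units unless noted)
ELEMENTARY_CHARGE = 1.602176634e-19   # C
ELECTRON_MASS = 9.1093837015e-31      # kg
SPEED_OF_LIGHT = 299_792_458.0        # m/s
ELECTRON_REST_ENERGY_EV = 0.51099895e6
HC_EV_M = 1.23984198e-6               # h*c in eV*m


def deflection_parameter(peak_field_t, period_m):
    """Undulator strength K = e * B0 * lambda_u / (2 * pi * m_e * c)."""
    return (ELEMENTARY_CHARGE * peak_field_t * period_m
            / (2 * math.pi * ELECTRON_MASS * SPEED_OF_LIGHT))


def fundamental_photon_energy_ev(beam_energy_gev, peak_field_t, period_m):
    """On-axis first-harmonic photon energy from the undulator equation."""
    gamma = beam_energy_gev * 1e9 / ELECTRON_REST_ENERGY_EV  # Lorentz factor
    k = deflection_parameter(peak_field_t, period_m)
    wavelength_m = period_m / (2 * gamma**2) * (1 + k**2 / 2)
    return HC_EV_M / wavelength_m


# Hypothetical device: 5 cm period in a 1.9 GeV ring; opening the gap lowers B0.
for field_t in (0.5, 0.3):
    k = deflection_parameter(field_t, 0.05)
    energy = fundamental_photon_energy_ev(1.9, field_t, 0.05)
    print(f"B0 = {field_t:.1f} T -> K = {k:.2f}, first harmonic ~ {energy:.0f} eV")
```

Weakening the field lowers K and pushes the first harmonic to higher photon energy, which is the kind of adjustment beamline users make; the printed numbers are only as meaningful as the assumed device parameters.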

Interior panorama of storage ring and beamlines at the Advanced Light Source (ALS). [Image: Roy Kaltschmidt/Photo courtesy of Lawrence Berkeley National Laboratory]

The stability of the X-ray beams coming off a storage ring depends directly on the stability of the electron beam that creates them, and specifically on how much the electron beam’s vertical size fluctuates. And there’s the rub. With dozens of experiments running at the same time, the adjustments made at individual insertion devices to tune each beamline’s light (changing undulator gaps, for instance) feed back into the main electron beam and degrade its stability.

Engineers have devised a variety of local and global corrections and feedback loops to combat the resulting beam fluctuations. But the battle for beam stability will intensify considerably as so-called fourth-generation, diffraction-limited storage rings come online, at ALS and elsewhere. These next-generation rings are designed to boost synchrotron brightness by two to three orders of magnitude and to deliver intense, highly coherent X-ray beams for techniques such as ptychography and X-ray photon correlation spectroscopy, which will probe electrochemical systems such as batteries and fuel cells at unprecedented resolution. But their smaller source sizes will require much tighter control of source-size stability than current third-generation correction schemes can deliver.

Neural nets to the rescue

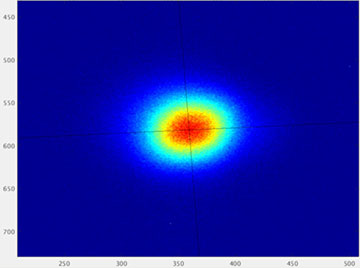

Profile of an electron beam at the LBNL Advanced Light Source synchrotron, represented as pixels measured by a charge-coupled device (CCD) sensor. [Image: Lawrence Berkeley National Laboratory]

To get past this stumbling block, the team behind the new research, led by LBNL scientist Simon Leemann, turned to a technique that’s increasingly being applied to a vast range of research problems—machine learning.

Using the current ALS as a test case, the team fed two streams of data from the storage ring into a multilayer neural network: the positions and magnetic perturbations of the various insertion devices, and the vertical width of the electron beam. These data “taught” the neural net how fluctuations at the various insertion devices affect the main beam’s size. Given that knowledge, the algorithm could, in principle, recommend local, real-time corrections to apply at the insertion-device locations to minimize fluctuations of the electron beam.
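
The training idea can be illustrated with a deliberately small sketch. It is not the authors’ network: the architecture (one tanh hidden layer trained by plain stochastic gradient descent on squared error), the six stand-in inputs (the real problem involves roughly 35 parameters), the invented response function that plays the role of machine data, and the names `TinyMLP` and `feedforward_correction` are all hypothetical choices made for illustration. It uses only the Python standard library.

```python
import math
import random


def make_synthetic_data(n_samples, n_inputs, seed=0):
    """Hypothetical stand-in for archived machine data: normalized
    insertion-device settings in [-1, 1] paired with a vertical beam-size
    deviation from nominal (um). The response function is invented."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_samples):
        x = [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        y = 0.5 * math.sin(2.0 * x[0]) + 0.3 * x[1] * x[2] - 0.2 * x[3] ** 2
        data.append((x, y))
    return data


class TinyMLP:
    """One tanh hidden layer and a linear output, trained with plain SGD."""

    def __init__(self, n_inputs, n_hidden, seed=1):
        rng = random.Random(seed)
        self.w1 = [[rng.gauss(0.0, 1.0 / math.sqrt(n_inputs)) for _ in range(n_inputs)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.gauss(0.0, 1.0 / math.sqrt(n_hidden)) for _ in range(n_hidden)]
        self.b2 = 0.0

    def _hidden(self, x):
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.w1, self.b1)]

    def predict(self, x):
        h = self._hidden(x)
        return sum(w * hj for w, hj in zip(self.w2, h)) + self.b2

    def sgd_step(self, x, y, lr):
        """One gradient step on the loss 0.5 * (prediction - y) ** 2."""
        h = self._hidden(x)
        err = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2 - y
        for j, hj in enumerate(h):
            # Backpropagate through tanh using w2[j] before it is updated.
            delta = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            self.b1[j] -= lr * delta
            for i, xi in enumerate(x):
                self.w1[j][i] -= lr * delta * xi
        self.b2 -= lr * err


def mse(model, data):
    return sum((model.predict(x) - y) ** 2 for x, y in data) / len(data)


def train(model, data, epochs=100, lr=0.05, seed=2):
    rng = random.Random(seed)
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)  # visit samples in a new random order each epoch
        for k in order:
            model.sgd_step(*data[k], lr)
    return model


def feedforward_correction(model, settings, gain=1.0):
    """Hypothetical: command a corrector knob, assumed to act linearly on the
    beam size, to cancel the predicted deviation for the current settings."""
    return -gain * model.predict(settings)


data = make_synthetic_data(200, n_inputs=6)
train_set, test_set = data[:150], data[150:]
model = TinyMLP(n_inputs=6, n_hidden=12)
before = mse(model, test_set)
train(model, train_set)
after = mse(model, test_set)
print(f"held-out MSE before training: {before:.4f}, after: {after:.4f}")
print(f"suggested correction: {feedforward_correction(model, test_set[0][0]):+.3f} um")
```

Held-out error should drop well below its untrained value. A real deployment would additionally need measured training data, input normalization, and safeguards before any suggested correction is applied to the accelerator.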

Sub-micron precision

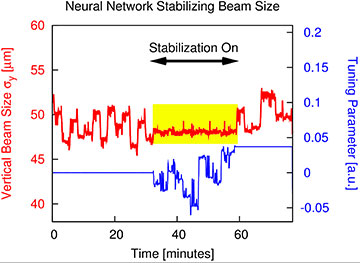

With the neural net trained, the team then put it to work during an actual ALS experimental run. The researchers found that the trained algorithm held the electron beam’s vertical-width fluctuations to within 0.2 μm, a precision of 0.4%. That is roughly five to seven times better than the 2%-3% beam-size precision previously achieved at ALS. And the team notes that, as with other machine-learning techniques, running the system continuously during regular user runs should further improve the results, as the algorithm keeps learning from new data.
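
The figures quoted for the trained system can be cross-checked with simple arithmetic. Dividing the 0.2 μm fluctuation by the 0.4% precision implies a vertical beam size of about 50 μm at the measurement point (an inference from the reported numbers, not a value stated in the article), and dividing the earlier 2%-3% by 0.4% gives the improvement factor.

```python
fluctuation_um = 0.2           # reported residual vertical-width fluctuation
relative_precision = 0.004     # reported precision of 0.4%
prior_precisions = (0.02, 0.03)  # earlier 2%-3% beam-size precision at ALS

# Beam size implied by the two reported numbers (an inference, not a quoted value)
implied_size_um = fluctuation_um / relative_precision
improvement = [p / relative_precision for p in prior_precisions]

print(f"implied vertical beam size: ~{implied_size_um:.0f} um")
print("improvement factor: " + ", ".join(f"{f:.1f}x" for f in improvement))
```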

Implementing neural-net machine learning substantially improved vertical beam-size stability during Advanced Light Source experimental runs. [Image: Lawrence Berkeley National Laboratory]

In a press release accompanying the research, Leemann, the team leader, observed that the machine-learning technique allowed the researchers to wade into a problem that otherwise would have seemed intractable. “The problem consists of roughly 35 parameters—way too complex for us to figure out ourselves,” he said.

Leemann added that the team will be actively seeking other arenas in which to test out the approach. “We have plans to keep developing this and we also have a couple of new machine-learning ideas we’d like to try out,” he said.

For now, the techniques the team has developed appear to offer a way to resolve a nettlesome problem for the next generation of diffraction-limited synchrotrons, at ALS and other facilities. That could set the stage for some very interesting science in the years ahead.