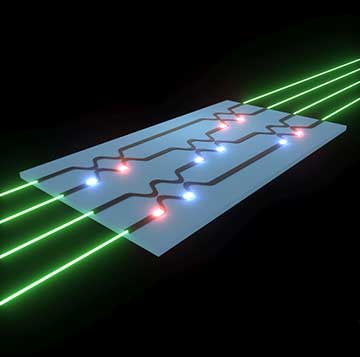

Researchers have shown that a neural network can be trained using an optical circuit (blue rectangle). The laser inputs (green) encode information that is carried through the chip by optical waveguides (black). The chip relies on tunable beam splitters, which are represented by the curved sections in the waveguides. These sections couple two adjacent waveguides together and are tuned by adjusting the settings of optical phase shifters (red and blue glowing objects). [Image: Tyler W. Hughes / Stanford University]

Training an artificial neural network for a specific task can be a computationally intensive and energy-consuming feat. Researchers at a U.S. university have demonstrated that such training can be accomplished on a silicon photonic chip (Optica, doi:10.1364/OPTICA.5.000864).

Stanford University engineering professor Shanhui Fan and his team created an optical analog of backpropagation, the standard algorithm for training neural networks. “The backpropagation algorithm is effectively just a method for finding the gradients of the network analytically using the standard chain rule for derivatives, and is the primary method for training neural networks on traditional computers,” says Tyler Hughes, a Stanford graduate student and lead author of the paper.
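Hughes's description can be made concrete with a small example. The sketch below uses a hypothetical two-weight network (the names `forward`, `backprop` and `finite_difference` are chosen for illustration only). It applies the chain rule by hand, working from the loss back to each weight, and checks the result against a numerical estimate. It runs on an ordinary computer and is not the Stanford group's optical method.

```python
import math

# Hypothetical toy network: y = w2 * tanh(w1 * x), with squared-error loss.

def forward(w1, w2, x):
    h = math.tanh(w1 * x)  # hidden activation
    return h, w2 * h       # (hidden value, output)

def loss(w1, w2, x, target):
    _, y = forward(w1, w2, x)
    return (y - target) ** 2

def backprop(w1, w2, x, target):
    """Analytic gradients via the chain rule, working backward from the loss."""
    h, y = forward(w1, w2, x)
    dL_dy = 2.0 * (y - target)
    dL_dw2 = dL_dy * h                  # dy/dw2 = h
    dL_dh = dL_dy * w2                  # dy/dh = w2
    dL_dw1 = dL_dh * (1.0 - h * h) * x  # dh/dw1 = (1 - tanh^2(w1 x)) * x
    return dL_dw1, dL_dw2

def finite_difference(w1, w2, x, target, eps=1e-6):
    """Central differences, used here only to check the analytic result."""
    g1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
    g2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
    return g1, g2

print(backprop(0.5, -1.2, 0.8, 0.3))
print(finite_difference(0.5, -1.2, 0.8, 0.3))
```

The chain-rule version delivers every gradient from one forward and one backward sweep, whereas finite differences need two extra loss evaluations per weight. That difference in scaling is why backpropagation is the workhorse for training.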

A new way to train

In previous experiments on optical neural networks, other researchers performed the network training on a traditional computer and then transferred the results onto a photonic chip. Here, the Stanford group performed the algorithm physically by propagating an error signal through the circuits of the chip. According to Hughes, this method “should make training of optical neural networks far more efficient and robust.”

For hardware, the Stanford team used a silicon photonic architecture similar to a programmable processor that researchers at the Massachusetts Institute of Technology, USA, described last year. It is essentially a mesh of tiny, tunable Mach-Zehnder interferometers. For software, the researchers derived the algorithm from the mathematics of the optical circuit, going all the way back to Maxwell's equations.
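As a rough illustration of what such a mesh computes, the sketch below models each Mach-Zehnder interferometer as two ideal 50:50 couplers with one phase shifter between them and another at the input, then chains several interferometers across four waveguides. The coupler convention, the lossless components and the helper names (`mzi`, `embed`, `mesh`, `is_unitary`) are assumptions for illustration, not details of the Stanford or MIT chips.

```python
import cmath
import math

S = 1 / math.sqrt(2)
# Assumed ideal, lossless 50:50 coupler (one common convention).
COUPLER = [[S, 1j * S], [1j * S, S]]

def matmul(a, b):
    """Plain-Python matrix product of nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def phase(p):
    """Phase shifter acting on the upper waveguide of a pair."""
    return [[cmath.exp(1j * p), 0], [0, 1]]

def mzi(theta, phi):
    """2x2 transfer matrix: input phase phi, coupler, internal phase theta, coupler."""
    return matmul(COUPLER, matmul(phase(theta), matmul(COUPLER, phase(phi))))

def embed(t, n, k):
    """Place a 2x2 block on waveguides k and k+1 of an n-waveguide identity."""
    m = [[complex(i == j) for j in range(n)] for i in range(n)]
    for r in range(2):
        for c in range(2):
            m[k + r][k + c] = t[r][c]
    return m

def mesh(settings, n):
    """Apply interferometers in order; each entry is (k, theta, phi)."""
    u = [[complex(i == j) for j in range(n)] for i in range(n)]
    for k, theta, phi in settings:
        u = matmul(embed(mzi(theta, phi), n, k), u)
    return u

def is_unitary(u, tol=1e-9):
    """Check U U^dagger = I, i.e. no optical power is gained or lost."""
    n = len(u)
    return all(
        abs(sum(u[i][k] * u[j][k].conjugate() for k in range(n)) - (i == j)) < tol
        for i in range(n) for j in range(n)
    )

# Hypothetical settings: six interferometers on four waveguides.
settings = [(0, 0.3, 1.1), (2, 2.0, 0.4), (1, 0.7, 2.5),
            (0, 1.9, 0.2), (2, 0.5, 3.0), (1, 1.2, 0.8)]
U = mesh(settings, 4)
print(is_unitary(U))
```

With this convention the internal phase theta sets the fraction of power that stays in the same waveguide, sin^2(theta/2), while phi sets a relative phase. Tuning these phases is what reprograms the matrix the mesh applies to the incoming light.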

Fine-tuning

The “teaching” of the network involves sending a laser pulse one way through the optical circuit, measuring how the output differs from the expected signal, then adjusting the circuit and sending the optical signal back. Based on the returned signal, the artificial neural network adjusts itself by tweaking its circuitry via optical phase shifters. This tuning happens by “applying an electrical voltage to a heating element on the chip's surface,” says Hughes, “which changes the optical properties of the waveguide slightly.” Tiny photodetectors near the phase shifters measure the intensity of the signal passing through the chip, giving the algorithm the gradient information needed for training and optimization.
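The forward-then-backward procedure can be sketched numerically. The model below is a deliberately small stand-in, assumed for illustration: two waveguides, one column of phase shifters between two fixed 50:50 couplers, and a squared-error loss. The forward pass records the field just after each phase shifter. The backward pass sends the conjugated error into the outputs, which for a reciprocal circuit propagates through the transpose of the output coupler's matrix. Combining the two fields at each phase shifter gives that phase's gradient, and a plain gradient-descent step updates the settings. This is a simplified adjoint-style calculation in the spirit of the paper, not the authors' derivation or code; all names are hypothetical.

```python
import cmath
import math

S = 1 / math.sqrt(2)
# Hypothetical fixed 50:50 couplers before (B) and after (A) the phase shifters.
A = [[S, 1j * S], [1j * S, S]]
B = [[S, 1j * S], [1j * S, S]]

def matvec(m, v):
    return [sum(m[i][k] * v[k] for k in range(len(v))) for i in range(len(m))]

def transpose(m):
    return [list(row) for row in zip(*m)]

def forward(phis, x):
    """Forward pass: field z just after the phase shifters, and output field y."""
    a = matvec(B, x)
    z = [cmath.exp(1j * p) * aj for p, aj in zip(phis, a)]
    return z, matvec(A, z)

def loss(phis, x, target):
    """Squared error between output and target fields."""
    _, y = forward(phis, x)
    return sum(abs(yk - tk) ** 2 for yk, tk in zip(y, target))

def adjoint_gradient(phis, x, target):
    """dL/dphi_j for every phase from one forward and one backward pass."""
    z, y = forward(phis, x)
    err = [yk - tk for yk, tk in zip(y, target)]
    # Backward pass: inject conj(error) at the outputs; for a reciprocal
    # circuit the backward transfer matrix is the transpose of A.
    b = matvec(transpose(A), [e.conjugate() for e in err])
    # Chain rule: dL/dphi_j = 2 Re(i z_j b_j) = -2 Im(z_j b_j)
    return [-2 * (zj * bj).imag for zj, bj in zip(z, b)]

def train(phis, x, target, lr=0.5, steps=200):
    """Plain gradient descent on the phase settings."""
    for _ in range(steps):
        grad = adjoint_gradient(phis, x, target)
        phis = [p - lr * g for p, g in zip(phis, grad)]
    return phis

x = [1, 0]        # light enters the upper waveguide
target = [0, 1j]  # want all of it in the lower output, with a set phase
phis = train([1.0, -0.5], x, target)
print(phis, loss(phis, x, target))
```

For this particular circuit the loss works out to 2 - cos(phi_0) - cos(phi_1), so both phases are driven toward zero. In a physical chip the fields z and b would be carried by light rather than computed, and photodetectors see only their power, not the complex amplitudes.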

“It was challenging to figure out how the gradient information could be read out using these photodetectors, but we invented a clever technique for doing this through interference within the circuit,” Hughes explains. “Once this was figured out, the backpropagation part itself was fairly clear to derive.”
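The article does not detail the interference technique, but the underlying principle can be illustrated. A photodetector reports power, |E|^2, not a complex field. Suppose, as in a simple adjoint-style model with a squared-error loss, that the gradient for one phase shifter takes the form -2 Im(z*b), where z is the forward field and b the backward field at that shifter (an assumption here). Because interfering two fields adds a cross term to the detected power, that quantity can be recovered from three power readings: forward light alone, backward light alone, and both together. How the combined field is prepared (here, a phase-conjugated copy of b shifted by -90 degrees) is an assumption of this sketch, not a description of the Stanford scheme; the function names are hypothetical.

```python
import random

def simulate_readout(z, b):
    """Hypothetical power readings at one phase shifter.
    z: forward-pass field, b: backward (adjoint) field, as complex amplitudes."""
    i_forward = abs(z) ** 2  # forward light alone
    i_adjoint = abs(b) ** 2  # backward light alone
    # Both at once: the backward contribution is assumed to arrive
    # phase-conjugated and shifted by -90 degrees, so the two interfere.
    i_combined = abs(z - 1j * b.conjugate()) ** 2
    return i_forward, i_adjoint, i_combined

def gradient_from_intensities(i_forward, i_adjoint, i_combined):
    """Isolate the cross term: |z + w|^2 - |z|^2 - |w|^2 = 2 Re(z * conj(w))."""
    return i_combined - i_forward - i_adjoint

rng = random.Random(0)
for _ in range(3):
    z = complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    b = complex(rng.uniform(-1, 1), rng.uniform(-1, 1))
    measured = gradient_from_intensities(*simulate_readout(z, b))
    direct = -2 * (z * b).imag  # what an amplitude-aware computation would give
    print(f"{measured:+.6f}  {direct:+.6f}")
```

With w = -i*conj(b), the cross term 2 Re(z*conj(w)) equals 2 Re(i*z*b) = -2 Im(z*b), so the two printed columns agree: power-only detectors suffice once the right fields interfere.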

The researchers hope that their work can lead to the automatic optimization of other types of reconfigurable photonic systems, not only optical neural networks.