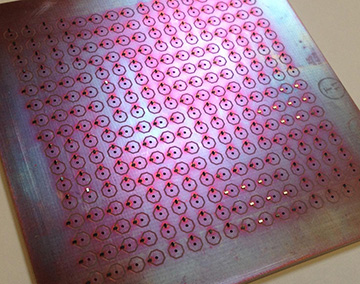

A scheme for locating arbitrary objects in a room via microwaves uses an array of metamaterial cells, such as this one, to shape the microwave fronts. The scattering patterns of the shaped waves can then be tied to mathematical functions related to object positions. [Image: Timothy Sleasman]

Many applications of the Internet of Things, from smart-home sensors to security, will depend on the ability to locate people and objects in a given space. It’s long been known how to do that if the object is tagged and emitting a signal; think, for example, of a big-box store’s ability to track your travels in the store via your cellphone’s Wi-Fi. But nailing down the position of a non-emitting, “non-cooperative” object, or objects, constitutes a much tougher problem—especially when that object is in motion.

A team of scientists in France and the United States now proposes a solution that uses the scattering patterns of custom-shaped microwaves, produced on the fly by a metamaterial antenna, to serve as fingerprints for locating the objects doing the scattering (Phys. Rev. Lett., doi: 10.1103/PhysRevLett.121.063901). Thus far, the system has been tested only in a laboratory demo involving three objects. But the researchers believe that—aided by machine learning to handle complex, evolving room shapes and situations—it can be scaled up to form “the basis for futuristic localization or tracking sensors in smart homes.”

Making a virtue of complexity

At first glance, locating an object through its scattered waves, whether acoustic or microwave, seems a hopeless task even in a simple room, because the wave can take any of a seemingly unbounded number of paths as it bounces around. But the French-U.S. team, led by OSA Fellow David R. Smith of Duke University, USA, and visiting researcher Philipp del Hougne from the Institut Langevin in Paris, France, suggests that this very complexity can be turned to advantage in locating non-emitting objects in a particular space.

The key, the team explains, is that information on the position of a given wave-emitter or wave-scatterer within a room is encoded within its so-called Green’s function, a mathematical tool that describes a system’s response to a point source and is used to solve certain kinds of problems with specific boundary conditions. In principle, every source position is associated with a unique Green’s function, which can be approximated given enough scattered-wave data.

If the Green’s function can be effectively measured and decoded, the location of even a non-emitting, scattering object within a room can, in principle, be inferred by comparing the measured Green’s function to a predefined dictionary of functions tied to specific positions. The Green’s function thus acts as a sort of fingerprint for an object’s location within a given space.
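The dictionary-lookup idea can be illustrated with a minimal Python sketch. It is not the team's code: the random complex vectors merely stand in for measured Green's-function data, and the names `correlation`, `locate` and `random_fingerprint`, the 16-sample fingerprint length and the noise level are all assumptions made for illustration.

```python
import random

def correlation(a, b):
    """Magnitude of the normalized inner product of two complex vectors."""
    inner = sum(x * y.conjugate() for x, y in zip(a, b))
    norm_a = sum(abs(x) ** 2 for x in a) ** 0.5
    norm_b = sum(abs(y) ** 2 for y in b) ** 0.5
    return abs(inner) / (norm_a * norm_b)

def locate(measured, dictionary):
    """Return the position whose stored fingerprint best matches the measurement."""
    return max(dictionary, key=lambda pos: correlation(measured, dictionary[pos]))

rng = random.Random(0)  # fixed seed so the sketch is repeatable

def random_fingerprint(n):
    """Hypothetical stand-in for a position's Green's-function samples."""
    return [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]

# One stored fingerprint per candidate position (23 positions, 16 samples each)
dictionary = {pos: random_fingerprint(16) for pos in range(23)}

# Simulate a noisy measurement of an object sitting at position 7
true_pos = 7
measured = [v + complex(rng.gauss(0, 0.1), rng.gauss(0, 0.1))
            for v in dictionary[true_pos]]

estimate = locate(measured, dictionary)
print(estimate)
```

The lookup works here only because each position's fingerprint is nearly uncorrelated with the others, which is the role the room's complex scattering plays in the real system.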

Wavefront shaping to the rescue

The difficulty lies in just how to read that fingerprint, since a given space includes a lot of Green’s functions—and getting at even one of those functions takes a lot of information. One approach might be to set up dozens of antennas in a large room to take numerous measurements at different spots, and thereby approximate the Green’s function of the scatterer. But that’s a costly approach and doesn’t really scale.

Another, the researchers point out, could involve using only a couple of antennas, along with a single broadband signal, and cycling through multiple frequency slices in that signal. Since the Green’s function is frequency dependent, this might allow one to quickly narrow down the specific Green’s function of the scatterer and efficiently look up the relevant position in the function dictionary. But this solution also could well break down in the real world, owing to interference with the cacophony of Bluetooth, Wi-Fi and other RF signals in the environment.

The team led by Smith and del Hougne proposes still another approach: wavefront shaping. Rather than using a single broadband signal and chopping it up, the team chooses a single frequency and uses a metamaterial antenna, known as a spatial microwave modulator (SMM), to emit waves at that frequency multiple times in quick succession. Each time, the SMM is electronically reconfigured to create a different, randomly shaped wavefront. By repeatedly and rapidly changing the boundary conditions in this way, the system can quickly narrow down the family of Green’s functions, and thus the object positions, that could have produced the scattered signal.
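How a sequence of random wavefronts builds up a fingerprint at a single frequency can be sketched in a few lines of Python. This is a toy model under stated assumptions, not the researchers' implementation: each SMM element is treated as simply on or off, the receiver reading is modeled as a linear sum of the active elements' contributions, and the `channel` values are random placeholders for the room's true response. The element count of 39 matches the demo panel described later in this article; the eight masks are arbitrary.

```python
import random

rng = random.Random(1)  # fixed seed so the sketch is repeatable

N_ELEMENTS = 39  # SMM elements, as in the lab demo described in this article
N_MASKS = 8      # number of random wavefronts (illustrative choice)

def random_mask():
    """One random SMM configuration: each element on (1) or off (0). Assumed binary."""
    return [rng.choice((0, 1)) for _ in range(N_ELEMENTS)]

def measure(mask, channel):
    """Single-frequency reading at one receiver: sum of active elements' contributions."""
    return sum(m * h for m, h in zip(mask, channel))

# Hypothetical per-element channel to the receiver for one object arrangement;
# in a real room these values would depend on where the scatterers sit.
channel = [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(N_ELEMENTS)]

# Cycle through the random wavefronts and record one reading per mask
masks = [random_mask() for _ in range(N_MASKS)]
fingerprint = [measure(mask, channel) for mask in masks]

for reading in fingerprint:
    print(f"{abs(reading):.3f}")
```

Because the same mask sequence can be replayed for every candidate arrangement, the resulting vectors of readings are directly comparable, which is what makes a lookup against a precomputed dictionary possible.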

Tabletop experiment

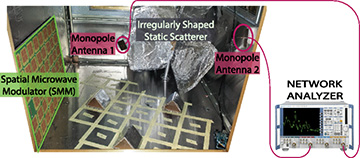

The experiment was set up in a one-cubic-meter box fitted with a metamaterial antenna emitter and two monopole antennas. [Image: P. del Hougne et al., Phys. Rev. Lett. 121, 063901 (2018); Creative Commons Attribution 4.0 International]

To demonstrate the method, the team constructed a lab-scale, one-cubic-meter metal cavity. One wall of the cavity included a flat-panel SMM consisting of 39 metamaterial elements for wavefront production and shaping. The cavity also contained an arbitrary static scatterer (to introduce a measure of disorder into the cavity), and two monopole antennas to grab the scattered microwave signal at different points in the “room.”

The researchers then placed three tent-shaped metal objects at any of 23 different positions on the cavity floor, resulting in up to 1,771 possible configurations. The group found that using wavefront shaping to home in on the best set of Green’s functions, and then computationally looking up the resulting positions in a dictionary the team had developed for the space through numerical modeling, allowed the object positions to be identified with surprising efficiency, “using up to eight times fewer measurements than there were potential object permutations.”
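The 1,771 figure is the number of ways to choose 3 of the 23 positions when the objects are treated as interchangeable, as this short Python check shows. The measurement budget at the end is only a rough reading of the quoted claim, assuming "eight times fewer" means a factor of eight; the article does not state the exact count.

```python
from math import comb, perm

positions = 23
objects = 3

# Unordered placements of 3 identical objects on 23 positions: 23*22*21 / 6
configurations = comb(positions, objects)

# For comparison, ordered placements (distinguishable objects): 23*22*21
ordered = perm(positions, objects)

# Rough budget if one-eighth as many measurements as configurations suffice (assumption)
measurement_budget = configurations / 8

print(configurations, ordered, measurement_budget)
```

So although the quoted passage speaks of "permutations," the stated total corresponds to unordered combinations.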

Scaling via machine learning

The team recognizes that scaling this system up from a simple lab demo to something useful in the real world will mean clearing a number of hurdles. For one, real-world rooms are apt to have a much more complex set of Green’s functions to query. And the configuration of real-world rooms will also tend to evolve in time, which makes the notion of finding arbitrary non-emitting objects within them both literally and figuratively a moving target.

The authors believe, though, that machine learning via deep-neural-net computing, coupled with compressive sensing to cope with the potentially large data volumes, could help handle these complexities. And as these issues are solved and as the approach is scaled up, the group sees a variety of applications of the wavefront-shaping approach to finding objects in a space.

Those could range, the researchers suggest, from better systems for saving energy (for example, improvements to motion detectors for turning down the heat automatically when everyone has left a room), to counting the number of persons in a space, to monitoring breathing patterns in healthcare settings. Indeed, the French members of the team have created a startup company, Greenerwave, to explore commercialization of the technique.