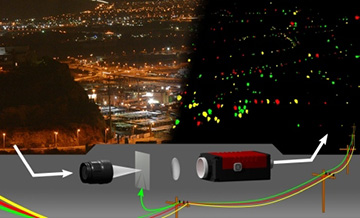

An Israel-Canada team reports that it has used a customized camera and a database of the flickering patterns of light sources to tease out the details of nighttime illumination. [Image: Courtesy of Yoav Schechner]

While we don’t notice it, most artificial illumination includes a very rapid flicker at twice the frequency of the alternating current (AC) that powers it; the 60 Hz grid in the United States, for example, produces a 120 Hz flicker. A team from the Technion, Israel, and the University of Toronto, Canada, now argues that, coupled with the right information and properly tuned equipment, this rapid flicker offers a rich source of information on the nighttime landscape and on illuminated indoor environments. The researchers believe that viewing the illuminated landscape through its flicker could find use in computational imaging and even in monitoring and assessing a city’s power grid.

Faster than the eye

Perceiving the flicker in the landscape—let alone trying to pull information from it—is a tough proposition in a number of respects. One reason is that, in addition to being imperceptible to the naked eye, the flicker is extremely difficult to capture even on a high-speed camera; getting useful images at the required frame rate of 1000 fps or more becomes all but impossible in low-light situations, such as an illuminated cityscape. The usual alternative for capturing a dimly lit scene, a time exposure, integrates across the flicker time scale and thus can’t provide information about the flicker itself.
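To put rough numbers on the capture problem, here is a minimal Python sketch. It assumes, as holds for an idealized resistive bulb, that intensity peaks on both half-cycles of the AC waveform, so the flicker runs at twice the mains frequency; the helper names are illustrative, not taken from the researchers' work.

```python
# Back-of-the-envelope arithmetic for capturing AC flicker (illustrative only).

def flicker_hz(mains_hz: float) -> float:
    # Intensity peaks on both the positive and negative half-cycles of the
    # AC waveform, so the flicker frequency is twice the mains frequency.
    return 2.0 * mains_hz

def samples_per_flicker_cycle(fps: float, mains_hz: float) -> float:
    # How many video frames land inside a single flicker cycle.
    return fps / flicker_hz(mains_hz)

def max_exposure_ms(fps: float) -> float:
    # A frame can integrate light for at most one frame period.
    return 1000.0 / fps

for mains in (60.0, 50.0):
    print(f"{mains:.0f} Hz mains -> {flicker_hz(mains):.0f} Hz flicker, "
          f"{samples_per_flicker_cycle(1000, mains):.1f} frames per cycle at 1000 fps, "
          f"at most {max_exposure_ms(1000):.1f} ms of light per frame")
```

Even at 1000 fps, each frame sees only about eight points of a 120 Hz flicker cycle and can collect light for at most a millisecond, which is why dim scenes defeat conventional high-speed capture.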

Further, it turns out that, even when bulbs are plugged into the same underlying AC power source, their flickering behavior can vary substantially. Different AC light sources often don’t flicker in the same phase, because power is intentionally distributed over the grid’s separate phases to help balance loads. And the different circuitry and emission mechanisms of fluorescent, incandescent, LED and other types of lighting give individual bulbs very different temporal intensity profiles, depending on bulb type, manufacturer and model. All of this makes analyzing the rapid nighttime flicker in a cityscape, or even in a room with multiple light sources, a very complicated affair.
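The phase offsets can be illustrated with a simplified model. The sketch assumes idealized incandescent-like bulbs (intensity proportional to the square of the supply voltage) on the three legs of a 60 Hz three-phase supply, whose voltages are 120 degrees apart; real bulbs have the more complex profiles described above.

```python
import math

MAINS_HZ = 60.0                          # assumption: US grid
FLICKER_PERIOD_S = 1.0 / (2 * MAINS_HZ)  # intensity repeats every half AC cycle

# Voltage phase of each leg of a three-phase supply, 120 degrees apart.
GRID_PHASES = {"A": 0.0, "B": 2 * math.pi / 3, "C": 4 * math.pi / 3}

def idealized_intensity(t: float, phase_rad: float) -> float:
    """Idealized resistive bulb: emitted power follows V(t)^2, so the
    intensity is proportional to sin^2 of the supply's phase angle."""
    return math.sin(2 * math.pi * MAINS_HZ * t - phase_rad) ** 2

def peak_time_ms(phase_rad: float, steps: int = 10_000) -> float:
    """Brute-force search for the intensity peak within one flicker period."""
    times = [i * FLICKER_PERIOD_S / steps for i in range(steps)]
    return 1000 * max(times, key=lambda t: idealized_intensity(t, phase_rad))

for leg, phi in GRID_PHASES.items():
    print(f"leg {leg}: intensity peaks at {peak_time_ms(phi):.2f} ms")

# For these idealized bulbs the three legs always sum to the same value (1.5),
# i.e. the combined power draw stays flat: the load-balancing motivation.
print(sum(idealized_intensity(0.0123, phi) for phi in GRID_PHASES.values()))
```

The three peaks fall a third of a flicker period apart, so even identical bulbs on different legs produce visibly shifted flicker.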

ACam and DELIGHT

The Israel-Canada research team, including Mark Sheinin and OSA Member Yoav Schechner from the Technion and Kiriakos Kutulakos from the University of Toronto, decided to turn that complexity to their advantage and find a way to harvest information from this diverse data source.

The team first developed a camera prototype that’s specifically tuned to capture the faster-than-the-eye flickering patterns of different lighting. The prototype, dubbed the ACam, starts with an off-the-shelf camera whose electronic shutter stays open for hundreds of AC cycles, and adds a digital micromirror device that optically blocks the sensor most of the time, unmasking each pixel only at brief instants timed at twice the AC rate. As a result, the camera takes what amounts to a time exposure, but gathers its light at the same points in each AC-driven flicker cycle. That lets the ACam collect enough light while still distinguishing the different flicker components that might underlie a complex nighttime scene.
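The masking idea can be simulated in a few lines. This is a simplified model under stated assumptions, not the ACam's actual micromirror control: a hypothetical bulb waveform, a mask that opens for a short window at a fixed offset within every flicker cycle, and light accumulated over many cycles.

```python
import math

MAINS_HZ = 60.0
FLICKER_PERIOD_S = 1.0 / (2 * MAINS_HZ)  # the mask reopens at twice the AC rate

def bulb(t: float) -> float:
    """Hypothetical flicker waveform: a steady level plus a 120 Hz ripple."""
    return 1.0 + 0.5 * math.cos(2 * math.pi * t / FLICKER_PERIOD_S)

def masked_exposure(signal, offset_s, window_s, n_cycles, dt=1e-6):
    """Accumulate light only while the simulated mask is open: a window of
    length window_s starting offset_s into every flicker cycle."""
    steps = max(1, int(round(window_s / dt)))
    total = 0.0
    for k in range(n_cycles):
        start = k * FLICKER_PERIOD_S + offset_s
        for i in range(steps):
            total += signal(start + (i + 0.5) * dt) * dt
    return total

def normalized(signal, offset_s, window_s=2e-4, n_cycles=100):
    # Dividing by the total open time turns the accumulated light into an
    # estimate of the waveform near this phase offset.
    return masked_exposure(signal, offset_s, window_s, n_cycles) / (n_cycles * window_s)

# Sweep the mask offset across one flicker cycle to sample the waveform.
for j in range(8):
    offset = j * FLICKER_PERIOD_S / 8
    print(f"offset {1000 * offset:.2f} ms -> {normalized(bulb, offset):.3f}")
```

Each offset accumulates light over 100 cycles yet still reports the waveform near one phase. Widening the window to a full flicker period, as an ordinary time exposure effectively does, collapses every reading to the steady level and the flicker information disappears.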

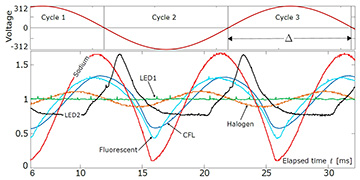

The researchers used experimental data to create a database of bulb response functions, giving characteristic flicker patterns for a variety of common bulb types. [Image: M. Sheinin et al., 2017]

As a second key step, the team amassed a database of bulb response functions (BRFs) for a variety of bulb types common in indoor and outdoor lighting. To obtain the data, the team tied a photodiode-based ambient light sensor to a reference AC outlet, and exposed the sensor to each bulb type, taking between 100 and 400 repeated measurements of the photodiode output, and averaging them for each bulb. The result of these measurements is what the researchers call the Database of Electric LIGHTs (DELIGHT), encompassing a range of BRFs across common nighttime light sources.
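Why average 100 to 400 measurements? A minimal sketch, assuming a hypothetical ground-truth BRF, Gaussian sensor noise, 64 samples per flicker cycle and a unit-mean normalization (all illustrative choices, not DELIGHT's documented procedure), shows the noise shrinking as more synchronized traces are averaged.

```python
import math
import random

N_SAMPLES = 64  # assumption: samples per flicker cycle

def true_brf(phase: float) -> float:
    """Hypothetical ground-truth BRF; phase runs over [0, 1) of one flicker cycle."""
    return 1.0 + 0.6 * math.cos(2 * math.pi * phase) + 0.2 * math.cos(4 * math.pi * phase)

def measure_cycle(rng: random.Random, noise: float = 0.1) -> list:
    """One simulated photodiode trace. Synchronizing to the reference outlet
    means sample i always lands at the same point of the AC cycle."""
    return [true_brf(i / N_SAMPLES) + rng.gauss(0.0, noise) for i in range(N_SAMPLES)]

def estimate_brf(traces: list) -> list:
    """Average repeated traces sample by sample, then scale to unit mean
    (the normalization is an assumption that makes bulbs comparable)."""
    n = len(traces)
    avg = [sum(column) / n for column in zip(*traces)]
    mean = sum(avg) / len(avg)
    return [v / mean for v in avg]

def rms_error(estimate: list) -> float:
    # Compare against the ground truth, normalized the same way.
    truth = [true_brf(i / N_SAMPLES) for i in range(N_SAMPLES)]
    scale = sum(truth) / N_SAMPLES
    return math.sqrt(sum((e - t / scale) ** 2 for e, t in zip(estimate, truth)) / N_SAMPLES)

rng = random.Random(0)
for n in (1, 100, 400):
    traces = [measure_cycle(rng) for _ in range(n)]
    print(f"{n:4d} traces -> RMS error {rms_error(estimate_brf(traces)):.4f}")
```

With independent noise, the error falls roughly as one over the square root of the number of traces, so a few hundred repetitions cut it by more than an order of magnitude.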

Monitoring the grid

Putting the DELIGHT database and the ACam camera together offers the prospect of some interesting applications, according to the Technion’s Schechner. One relates to monitoring of the electrical grid, an increasing concern not just practically but in terms of global security. “Using the approaches we’ve outlined, the bulbs become probes to the state of the electric grid,” he says. “They are embedded all over the grid, and react to whatever goes on there.” As a result, he suggests, using “bulb-based methods” such as these could allow power engineers to analyze the electrical grid statistically and to observe and measure nonlinear network effects in the grid that might currently be obscure.
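As one hypothetical illustration of a bulb acting as a grid probe (not a method described by the team), the sketch below estimates the mains frequency from a simulated photodiode trace by scanning a discrete-time Fourier transform for the strongest peak near twice the nominal 60 Hz. The sampling rate, noise level and scan parameters are assumptions.

```python
import cmath
import math
import random

def simulate_flicker(mains_hz, fs=1000.0, seconds=2.0, seed=0):
    """Hypothetical photodiode trace of one bulb: a steady level, a ripple at
    twice the mains frequency, and a little sensor noise."""
    rng = random.Random(seed)
    n = int(fs * seconds)
    return [1.0 + 0.4 * math.cos(2 * math.pi * 2 * mains_hz * i / fs) + rng.gauss(0.0, 0.05)
            for i in range(n)]

def dft_magnitude(x, freq_hz, fs):
    # Magnitude of the discrete-time Fourier transform of x at one frequency.
    return abs(sum(v * cmath.exp(-2j * math.pi * freq_hz * i / fs) for i, v in enumerate(x)))

def estimate_mains_hz(samples, fs, nominal_hz=60.0, span_hz=0.25, step_hz=0.005):
    """Scan candidate mains frequencies near nominal and return the one whose
    doubled value (the flicker frequency) has the strongest spectral peak."""
    mean = sum(samples) / len(samples)
    x = [s - mean for s in samples]  # remove the steady (DC) light level
    n_steps = int(round(2 * span_hz / step_hz))
    candidates = [nominal_hz - span_hz + k * step_hz for k in range(n_steps + 1)]
    return max(candidates, key=lambda f: dft_magnitude(x, 2 * f, fs))

trace = simulate_flicker(59.97)  # a grid running slightly slow
print(f"estimated mains frequency: {estimate_mains_hz(trace, 1000.0):.3f} Hz")
```

A direct single-frequency scan keeps the example dependency-free; in practice an FFT with interpolation would be faster.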

The research team also believes that the ability to read ambient light’s flicker, and to tie it to a specific database of bulbs, could find use in digital imaging. Computer vision and imaging specialists describe how light from each source reaches a camera’s pixels in terms of a so-called light transport matrix; the image of a scene under ambient illumination is then a linear combination of the contributions from each individual light source. Using the ACam to capture the scene and the DELIGHT database to interpret it, the team says, allows the individual light sources to be identified, weighted, and digitally subtracted out, or unmixed, from the scene.
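Because the image is linear in the sources, unmixing can be posed as a per-pixel least-squares problem. In this minimal sketch, which is a simplified model rather than the authors' formulation, each sub-frame is assumed to sample the scene at one mask offset, and two hypothetical BRFs, sampled at the same offsets, stand in for DELIGHT entries.

```python
import numpy as np

K = 8                       # number of sub-frames (mask phase offsets)
phase = np.arange(K) / K    # offsets as fractions of one flicker cycle

# Hypothetical BRFs sampled at those offsets, one column per light source.
brf = np.stack([
    1.0 + 0.9 * np.cos(2 * np.pi * phase),            # strongly flickering bulb
    1.0 + 0.3 * np.cos(2 * np.pi * (phase - 1 / 3)),  # gentler bulb on another grid phase
], axis=1)                                            # shape (K, S) with S = 2

def unmix(frames: np.ndarray, brf: np.ndarray) -> np.ndarray:
    """frames: (K, H, W) stack of sub-frames. Solves, for every pixel, the
    least-squares problem frames[:, y, x] ~= brf @ c and returns the
    per-source images c with shape (S, H, W)."""
    k, h, w = frames.shape
    coeffs, *_ = np.linalg.lstsq(brf, frames.reshape(k, h * w), rcond=None)
    return coeffs.reshape(brf.shape[1], h, w)

# Usage: synthesize sub-frames from two known source images, then recover them.
rng = np.random.default_rng(0)
truth = rng.uniform(0.0, 1.0, size=(2, 4, 5))  # each source's contribution image
frames = np.einsum("ks,shw->khw", brf, truth)  # linear image formation
recovered = unmix(frames, brf)
print(np.allclose(recovered, truth))
```

The recovery works only when the sources' sampled BRFs are linearly independent, which is where differences in bulb type and grid phase become an asset rather than a nuisance.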

This, in turn, could facilitate a variety of ways to tweak digital images. For example, a movie scene could quickly be digitally “relit,” to give an idea of how it might look if illuminated with a different type of bulb. And when a photographer shoots a naturally lit outdoor scene through a window, the photographer’s indoor reflection in the glass could be removed by subtracting out the AC-illuminated component of the transport matrix.
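A minimal sketch of the window-reflection case, assuming that daylight does not flicker (so it is modeled as a constant) and that a single indoor bulb with a hypothetical BRF lights the reflection. Scaling the fitted AC component is used here as a crude stand-in for relighting; it is not the team's relighting method.

```python
import numpy as np

K = 8
phase = np.arange(K) / K
# Hypothetical BRF of the indoor bulb that lights the photographer's reflection.
indoor_brf = 1.0 + 0.8 * np.cos(2 * np.pi * phase)
# Daylight does not flicker, so it is modeled as a constant column.
basis = np.stack([np.ones(K), indoor_brf], axis=1)  # shape (K, 2)

def split_daylight_and_ac(frames: np.ndarray):
    """Per-pixel least-squares fit frames[:, y, x] ~= d + a * indoor_brf.
    Returns the daylight image d and the AC-lit image a."""
    k, h, w = frames.shape
    coeffs, *_ = np.linalg.lstsq(basis, frames.reshape(k, h * w), rcond=None)
    daylight, ac = coeffs.reshape(2, h, w)
    return daylight, ac

def relight(daylight: np.ndarray, ac: np.ndarray, ac_gain: float) -> np.ndarray:
    """Time-averaged photo with the indoor bulb's contribution scaled by
    ac_gain: 0 removes it (e.g. the reflection), 1 reproduces the original."""
    return daylight + ac_gain * ac * indoor_brf.mean()

# Usage: a synthetic outdoor view plus an AC-lit reflection.
rng = np.random.default_rng(1)
daylight_true = rng.uniform(0.2, 1.0, size=(3, 3))
ac_true = rng.uniform(0.0, 0.5, size=(3, 3))
frames = daylight_true[None] + indoor_brf[:, None, None] * ac_true[None]
d, a = split_daylight_and_ac(frames)
print(np.allclose(relight(d, a, 0.0), daylight_true))  # reflection removed
```

Setting the gain to zero keeps only the non-flickering daylight, which is the subtraction the article describes; other gains brighten or dim the indoor light in the averaged photo.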

The team (along with Yoav Levron, another Technion scientist) has applied for a patent on the ACam, the DELIGHT database, and a number of other aspects of the technology. Schechner says the team is also working on several improvements, including “more clever masking and signal processing” as well as more efficient capture in the ACam, to allow for a higher-quality result with a somewhat shorter exposure time. The authors will present the paper (currently available at the Technion website) this Sunday, 23 July, at the IEEE Computer Vision and Pattern Recognition conference in Hawaii.