Feature

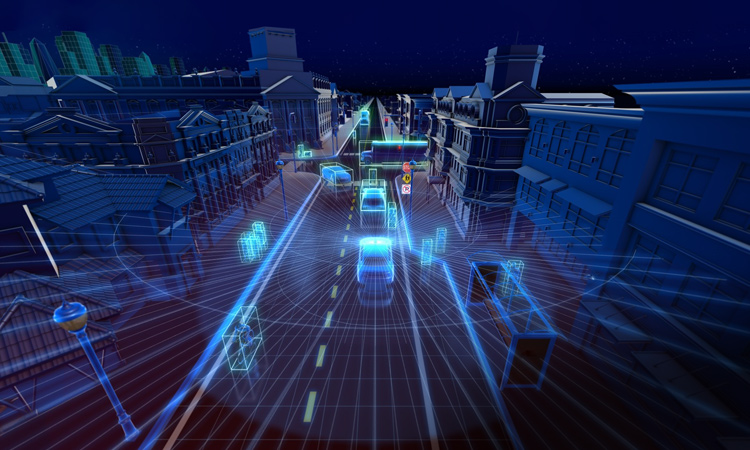

Integrated Lidar: Transforming Transportation

On-chip silicon photonics technology is enabling smaller, cheaper, better distance-ranging sensors to realize safe, ubiquitous vehicle automation and more.

[Velodyne]

The race to develop safe, inexpensive, fully autonomous vehicles is proceeding at full throttle, and photonics is destined to play a central role. In addition to radar and sonar, self-driving cars will undoubtedly rely heavily on photonics technologies found in new cars today, including cameras, LEDs, diodes, displays and optical sensors that help drivers park, stay in their lane and maintain speed.

Optica Members get the full text of Optics & Photonics News, plus a variety of other member benefits.