Vision sensors have become part of our everyday lives. They can be found in cell phone cameras, notebook webcams, digital cameras, video camcorders and surveillance equipment. Increasingly, they are also being investigated extensively by the global automotive industry. By capturing and processing live images with panoramic views and depth cues, vision sensors can play an important role in vehicle safety. They can help drivers detect other cars, pedestrians and obstacles, and alert them to stop or slow down quickly.

The ten-year period from 2011 to 2020 was recently proclaimed the “Decade of Action for Road Safety” by the United Nations General Assembly. This article discusses automotive vision sensors in the context of Indian roads. The streets of India provide perhaps the ideal “road test” conditions, given their abundance of potholes and bumps combined with almost zero adherence to traffic rules.

India covers a total area of 3.28 million sq. km, has a population of 1.13 billion growing at 1.4 percent per year, and is one of the fastest growing economies in the world. It is also slowly emerging as one of the biggest markets for vision sensors. For example, the digital camera sector, which relies on these sensors, was valued at INR 17.5bn in fiscal year 2010 and is expected to grow at a compound annual growth rate of 43 percent to reach INR 104.6bn by 2015.

Unfortunately, road safety in India has deteriorated in recent years. According to the Society of Indian Automobile Manufacturers, domestic sales of passenger and commercial vehicles increased from 1,380,002 in 2004 to 3,196,829 in 2010, a rise of about 130 percent. The expansion of the road network, however, has not kept pace with this growth in the number of automobiles on Indian roads.

Traffic in Kolkata, India.



Sensor technology

The vision sensors used in cameras are typically of two types: They are based on either a charge coupled device (CCD) or complementary metal oxide semiconductor (CMOS) technology. The vision or image sensor is similar to photographic film, which, when exposed to light, captures an image. It converts light into an electronic signal that is then processed and displayed. Both CCDs and CMOS sensors use arrays of millions of photo-detection sites, which are commonly referred to as pixels.

CCDs were invented in 1969 by George Smith and Willard Boyle at Bell Labs. A CCD is a simple series connection of metal-oxide-semiconductor capacitors that can be charged by light. Each capacitor can hold a charge corresponding to the shade of light falling on it, which makes the device useful for imaging. The accumulated charges are transferred from one capacitor to the next until they reach the output node, where they are converted into a voltage and measured.
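The transfer sequence above can be sketched as a toy model in Python. It is a simplification under stated assumptions, not a device simulation: charge packets are plain numbers, transfer is lossless, and `conversion_gain` is a hypothetical charge-to-voltage factor. What it illustrates is that every packet leaves through a single output node in a fixed order, so the whole array has to be clocked out.

```python
from typing import List


def ccd_readout(pixels: List[List[float]], conversion_gain: float = 1.0) -> List[float]:
    """Toy CCD readout: shift rows into a serial register, then clock packets out.

    The last row is taken to be nearest the serial register, and the last
    element of the register nearest the single output node.
    """
    rows = [row[:] for row in pixels]  # copy, because readout empties the array
    voltages = []
    while rows:
        # Vertical transfer: the row nearest the serial register moves into it.
        serial_register = rows.pop()
        # Horizontal transfer: packets move one at a time to the output node,
        # where charge is converted to a voltage.
        while serial_register:
            packet = serial_register.pop()
            voltages.append(packet * conversion_gain)
    return voltages


print(ccd_readout([[1, 2], [3, 4]]))
```

There is no way in this model to fetch a single pixel without clocking out the packets ahead of it, which is the limitation the next paragraphs contrast with CMOS.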

CMOS was introduced as a vision sensor technology in the early 1990s. With CMOS, a photodiode is typically used to generate charges, which are immediately converted into a voltage at the pixel.

CCDs are less susceptible to noise than CMOS sensors and thus create higher-quality, lower-noise images. However, they have very few output nodes and must be read out completely, which makes region-of-interest readout impractical and limits the output bandwidth of the sensor. They also require supporting circuits, which increase the size and complexity of the camera design and thereby increase cost and power consumption.

CMOS image sensors, on the other hand, are much less expensive and allow processing circuitry to be integrated alongside the photoreceptors. Their pixels can also be accessed randomly, so only a region of interest needs to be read out, which increases the effective output bandwidth.
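By contrast, addressable CMOS pixels let the readout skip everything outside a window. The sketch below models this with a Python list of lists standing in for the pixel array; the function name and `conversion_gain` are illustrative assumptions, not a real sensor interface.

```python
from typing import List, Tuple


def cmos_roi_readout(
    pixels: List[List[float]],
    row_range: Tuple[int, int],
    col_range: Tuple[int, int],
    conversion_gain: float = 1.0,
) -> List[List[float]]:
    """Read only a rectangular region of interest from an addressable pixel array.

    Each pixel is selected by its row and column address, so pixels outside
    [r0, r1) x [c0, c1) are never touched.
    """
    r0, r1 = row_range
    c0, c1 = col_range
    return [
        [pixels[r][c] * conversion_gain for c in range(c0, c1)]
        for r in range(r0, r1)
    ]


frame = [[r * 10 + c for c in range(5)] for r in range(4)]
print(cmos_roi_readout(frame, (1, 3), (2, 4)))
```

Reading a 100 × 100 window from a 1000 × 1000 array touches 10,000 pixels instead of 1,000,000, which is where the bandwidth gain comes from.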

State of the industry

Image sensors have come a long way over the years. CCDs dominated the market for a long time due to their excellent performance. However, in the past two decades, the cheaper CMOS vision sensors have come to be comparable in performance. They are increasingly being used in applications that once relied on CCDs, including space sensors, machine vision sensors, video cameras, digital single-lens reflex cameras and smart phones. The economics of the vision sensor market is showing tremendous growth potential.

In automotive applications, CMOS vision sensors are the preferred choice. They meet the special requirements of the industry, which include:

- Operation in adverse weather conditions and extreme illumination;
- A wide field of view; and
- An extremely high dynamic range, so that objects can be seen both in the dark and in bright light.
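Dynamic range is commonly quoted in decibels as the ratio of the largest signal a pixel can hold to its noise floor, DR = 20 log10(N_max / N_noise). A minimal sketch follows; the full-well and noise values in the example are illustrative assumptions, not figures for any particular sensor.

```python
import math


def dynamic_range_db(full_well_electrons: float, noise_floor_electrons: float) -> float:
    """Dynamic range in dB: largest storable signal over the noise floor."""
    return 20.0 * math.log10(full_well_electrons / noise_floor_electrons)


# Assumed example pixel: 20,000 e- full well, 5 e- noise floor.
print(f"{dynamic_range_db(20_000, 5):.1f} dB")
```

Each factor of 10 in the ratio adds 20 dB, so a scene containing both oncoming headlights and an unlit pedestrian quickly exceeds what a single conventional exposure can capture.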

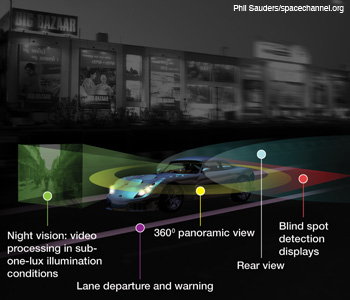

Possible research areas in automotives.


CMOS vision sensors can operate at temperatures up to 85 °C and support on-chip dynamic-range-extension techniques, making them the ideal choice for automotive applications.
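One common family of dynamic-range-extension techniques combines several exposures of the same scene. The sketch below assumes just two exposures, a 12-bit pixel that clips at 4095, and exposure times in arbitrary units; the function name is hypothetical, and the specific on-chip techniques used in automotive sensors vary and are not described in this article.

```python
from typing import List, Sequence


def merge_dual_exposure(
    long_px: Sequence[float],
    short_px: Sequence[float],
    t_long: float,
    t_short: float,
    saturation: float = 4095,  # assumed 12-bit full-scale code
) -> List[float]:
    """Combine a long and a short exposure into one extended-range estimate.

    Values are normalized by exposure time so both exposures share one scale.
    """
    merged = []
    for lo, sh in zip(long_px, short_px):
        if lo < saturation:
            merged.append(lo / t_long)   # long exposure: better signal in dark areas
        else:
            merged.append(sh / t_short)  # long exposure clipped: use the short one
    return merged


print(merge_dual_exposure([100, 4095], [6, 2000], t_long=16, t_short=1))
```

With an exposure ratio of 16, the brightest representable value grows sixteenfold, which extends the dynamic range by about 24 dB.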

Automakers began integrating vision sensors into their luxury models about a decade ago. For example, the Toyota Prius has an intelligent parking assist system with a rear-view camera that helps the driver park. XVision from Bendix Commercial Vehicle Systems is an infrared system that helps drivers of commercial trucks and buses drive more safely at night.

Currently, however, these sensors are limited to high-end vehicles. Although some vision sensors are available today for less than US$5, the processing and assembly required for automotive applications make the overall system costly, so such systems would not be easily accepted by Indian drivers. Indian vehicles and road conditions require indigenous solutions, and this opens up immense prospects for vision sensor research in the country. While this type of research is almost negligible in India at present, I believe there is great potential here. Custom-designed indigenous vision sensors could reduce costs for the Indian market and would greatly benefit Indian society. Here we look at some areas of automotive research in which vision sensors can play a crucial role.

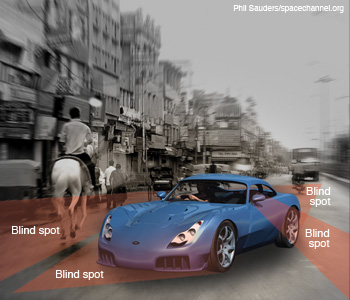

Typical vehicle blind spots.


The 360° panoramic and rear view

Panoramic views are characterized by a very large horizontal field of view. They are used to generate a 360° view of a scene and to help eliminate a vehicle’s “blind spot,” an area either in front of or behind the vehicle that is not visible to the driver. Rear-view mirrors and wide-angle lenses help the driver to an extent, but they do not completely eliminate the blind spot.

Large vehicles have huge blind spots that can hide a smaller vehicle completely. On Indian roads, where driving rules are hardly respected and vehicles often overtake from both the left and the right, rear-view mirrors become extremely important. However, in India, drivers often fold the side mirrors to protect them from passing vehicles in densely packed traffic, defeating their purpose.

This is where vision sensors can help. Rear-view cameras are usually mounted on either the license plate or the rear bumper, letting the driver see what is behind the vehicle. They are particularly useful when reversing or parking, which requires a high degree of precision in India, where parking spaces are scarce.

HCL Technologies in India produces the rvAid panoramic rear-view safety camera for automobiles. The rvAid solution consists of four cameras, two embedded in the vehicle’s rear bumper and two in the side-view mirrors, that capture and combine real-time video footage to provide a 190° panoramic view and drastically minimize blind spots.

The Unisee Vehicle Multiple camera system found in BMWs also uses several cameras to help drivers see into the blind spot. However, as stated earlier, such systems are complex and expensive, so there is a real need for low-cost systems that are simple to operate. The automobile industry can contribute to research on low-end rear-view cameras that could be universally adapted for vehicles in India. Such research would not require much funding from the automobile industry in India and would yield substantial societal benefits.

The multiple-camera system can be replaced with cameras that have fish-eye lenses to obtain a 360° view. Complete panoramic vision allows the driver to control and navigate the vehicle smoothly. However, images captured with any such wide-angle or multiple-lens optical system suffer from distortion, because mapping a spherical angular distribution onto a flat, rectangular detector distorts the image and causes the fields of view of adjacent lenses to overlap.

This distortion and the accompanying loss of image resolution require additional processing of the visual information. A distortion-correction lens usually compensates for the distortion and produces superior image quality, but such lenses are challenging to fabricate. On-chip optical distortion correction would improve image quality without the need for a distortion-correction lens.

To do this, correction algorithms must be implemented on-chip. A multichannel imaging system is significantly cheaper than the conventional approach of using multiple single-axis cameras. On-chip image correction and reconstruction from the multiple sub-images would drastically reduce the output bandwidth required of the sensor, enabling low-power operation. Such a system would also reduce the volume of the imaging optics and pave the way toward a compact image-capture system that could easily be integrated into Indian vehicles.
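As an illustration of the kind of correction such algorithms perform, the sketch below builds a per-pixel lookup table that maps a rectilinear output view back into a fish-eye image. It assumes an ideal equidistant fish-eye projection (r = f * theta), a pinhole output view (r = f * tan(theta)), both centred on the image, and nearest-neighbour sampling; real lenses need calibrated distortion models, and the function names are hypothetical. A table precomputed once is one way to keep per-frame work small enough for on-chip hardware.

```python
import math
from typing import Dict, List, Tuple

Table = Dict[Tuple[int, int], Tuple[float, float]]


def build_undistort_map(width: int, height: int, f_rect: float, f_fish: float) -> Table:
    """Map each output (rectilinear) pixel to a source position in the fish-eye image."""
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    table: Table = {}
    for v in range(height):
        for u in range(width):
            dx, dy = u - cx, v - cy
            r_rect = math.hypot(dx, dy)
            if r_rect == 0.0:
                table[(u, v)] = (cx, cy)  # the optical axis maps to itself
                continue
            theta = math.atan(r_rect / f_rect)  # angle of the incoming ray
            r_fish = f_fish * theta             # radius where the fish-eye records it
            scale = r_fish / r_rect
            table[(u, v)] = (cx + dx * scale, cy + dy * scale)
    return table


def remap_nearest(fisheye: List[List[int]], table: Table, width: int, height: int) -> List[List[int]]:
    """Build the corrected image by nearest-neighbour lookup into the fish-eye image."""
    out = [[0] * width for _ in range(height)]
    for (u, v), (x, y) in table.items():
        xi, yi = int(round(x)), int(round(y))
        if 0 <= yi < len(fisheye) and 0 <= xi < len(fisheye[0]):
            out[v][u] = fisheye[yi][xi]
    return out
```

Peripheral output pixels map to smaller radii in the fish-eye image, so the correction stretches the compressed edges of the field of view back out; that stretching is also where the loss of resolution mentioned above shows up.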

Night vision system to detect moving objects. (Top) View with standard car headlights. (Bottom) Enhanced vision using thermal sensing.


Night vision

Traffic death rates are three times higher at night than during the day, according to the National Safety Council. One reason is that glare from lights interferes with normal vision. It is also often difficult to detect pedestrians and animals at night and to judge the distance and speed of moving objects. Finally, one is more likely to encounter both drunk drivers and inebriated pedestrians during the evening hours.

Vision sensors must have a very high dynamic range to operate both in the dark and in direct sunlight. CMOS sensors can integrate dynamic-range-extension techniques and are therefore preferred over CCDs. However, their sensitivity in the dark is still very limited, so an infrared sensor typically must be used as well.

Night vision systems use infrared (IR) image sensors to present an image of the road ahead to the driver. There are two technologies on the market: active infrared, also called near-infrared (NIR), and passive infrared, also called far-infrared (FIR). In an NIR system, infrared radiation is directed in front of the vehicle by an infrared beamer. The rays are reflected back after striking objects in the vehicle’s path and are then converted into an image for display on the dashboard.

FIR systems instead detect differences in the heat, or infrared radiation, emitted by various objects, so they do not need an infrared beamer. BMW’s Night Vision is an example of an FIR-based system; it uses a thermal imaging camera from FLIR Systems. Thermal imaging can detect people or obstacles at a range of about 300 m, identifying moving objects in front of a car at a very early stage and giving the driver more time to react. Standard headlights allow vision extending 300 to 500 feet, while thermal imaging extends the driver’s vision fivefold, to more than 1,500 feet.
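The extra reaction time can be made concrete with simple arithmetic. The sketch below converts the viewing distances quoted above into seconds of travel at an assumed constant speed of 100 km/h; it ignores driver reaction time and braking distance, so it is only an upper bound on the warning each system provides.

```python
FEET_TO_METRES = 0.3048


def seconds_to_reach(distance_m: float, speed_kmh: float) -> float:
    """Time for a vehicle at constant speed to cover distance_m."""
    return distance_m / (speed_kmh / 3.6)  # km/h -> m/s


speed = 100.0  # km/h, an assumed cruising speed
for label, d in [
    ("headlights, 300 ft", 300 * FEET_TO_METRES),
    ("headlights, 500 ft", 500 * FEET_TO_METRES),
    ("thermal, 1,500 ft", 1500 * FEET_TO_METRES),
]:
    print(f"{label}: {seconds_to_reach(d, speed):.1f} s")
```

At this speed, headlights give roughly 3.3 to 5.5 seconds of warning, while 1,500 feet of thermal vision gives about 16.5 seconds.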

Despite these advantages, night vision systems are not yet very popular because they are costly and do not work in adverse weather conditions such as fog or heavy snow. To develop vision sensors that perform well at night, engineers can look to nature. For example, flying insects have very high sensitivity in low light. To make the most of the available light in the dark, insect eyes use spatial summation, pooling the signals of neighboring photoreceptors, or temporal summation, which effectively lengthens the exposure period.
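Both kinds of summation have straightforward sensor analogues: pixel binning for spatial summation and frame accumulation for temporal summation. The sketch below shows the two operations on plain Python lists, assuming a shot-noise-limited pixel in which the signal-to-noise ratio of N collected photons is sqrt(N). The function names are illustrative, not taken from any particular sensor design.

```python
import math
from typing import List

Image = List[List[float]]


def spatial_sum(image: Image, k: int) -> Image:
    """Pool non-overlapping k x k neighbourhoods (binning): less resolution, more signal."""
    h, w = len(image), len(image[0])
    return [
        [sum(image[r + i][c + j] for i in range(k) for j in range(k))
         for c in range(0, w - k + 1, k)]
        for r in range(0, h - k + 1, k)
    ]


def temporal_sum(frames: List[Image]) -> Image:
    """Add successive frames pixel by pixel, equivalent to a longer exposure."""
    return [[sum(px) for px in zip(*rows)] for rows in zip(*frames)]


def shot_noise_snr(mean_photons: float) -> float:
    """Photon (shot) noise is Poisson, so SNR = N / sqrt(N) = sqrt(N)."""
    return math.sqrt(mean_photons)
```

Pooling four pixels that each collect 4 photons yields 16 photons, raising the shot-noise-limited SNR from 2 to 4; temporal summation buys the same gain at the cost of motion blur instead of resolution.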

The dynamic range of a vision sensor can be increased in many ways. Research in this area could be coupled with studies of insect vision in order to develop low-cost, high-dynamic-range camera systems for automobile applications. These sensors, coupled with signal processing for “intelligent” image sensing, would be a valuable addition to vehicles.

Fog is very common in Indian winters, often reducing visibility to less than 50 feet. Often traffic gets stalled in the wee hours of the morning due to heavy fog. Driving in these conditions can be dangerous and even fatal. Fog lights can help, but not all vehicles are equipped with them.

Nature again offers solutions. For instance, fish move easily through a dense aquatic fog: they use polarization to improve the contrast of the imaged scene, which helps them navigate smoothly. Of the three characteristics of light (intensity, color and polarization), polarization provides the most general description of light. It can therefore provide a richer set of physical constraints for interpreting the imaged scene.

The research community has recently shown interest in polarization-detection devices, and scientists have designed and demonstrated such real-time sensors. The detection of linearly polarized light (the most common type found in nature) requires linear polarizers, which are directly integrated on photosensitive vision sensors, enhancing the compactness and speed of measurements. The integrated micropolarizers can be made either from organic materials or by using the metallic wire grid available with standard CMOS technology.

The advantage of the latter is that it comes almost for free with the CMOS process. Such a sensor can readily detect the degree of polarization with reasonable sensitivity. Being inexpensive and easy to integrate with the vision sensor would go a long way toward making these sensors popular for imaging in foggy conditions. However, more research is needed before polarization-based vision systems become a reality.
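How a sensor turns micropolarizer readings into a degree of polarization can be sketched with the linear Stokes parameters. The sketch assumes a "superpixel" of four neighboring pixels behind ideal wire-grid polarizers oriented at 0°, 45°, 90° and 135°; that layout is a common choice but an assumption here, and the function name is hypothetical.

```python
import math
from typing import Tuple


def polarization_from_superpixel(
    i0: float, i45: float, i90: float, i135: float
) -> Tuple[float, float]:
    """Degree (0..1) and angle (degrees) of linear polarization from four polarizer pixels.

    Assumes ideal polarizers, so I(theta) = (S0 + S1 cos 2theta + S2 sin 2theta) / 2.
    """
    s0 = (i0 + i45 + i90 + i135) / 2.0  # total intensity
    s1 = i0 - i90                       # preference for 0 over 90 degrees
    s2 = i45 - i135                     # preference for 45 over 135 degrees
    dolp = math.hypot(s1, s2) / s0 if s0 > 0 else 0.0
    aolp = 0.5 * math.degrees(math.atan2(s2, s1))
    return dolp, aolp


# Light fully polarized at 0 degrees follows Malus's law: 1, 0.5, 0, 0.5.
print(polarization_from_superpixel(1.0, 0.5, 0.0, 0.5))
# Unpolarized light gives equal readings and a degree of polarization of 0.
print(polarization_from_superpixel(0.5, 0.5, 0.5, 0.5))
```

Computing the degree of polarization per superpixel produces an image in which contrast comes from polarization rather than intensity alone, which is the extra information the text describes.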

3-D imaging, lane departure and motion detection

Three-dimensional imaging is being actively pursued within the automotive sector. Researchers are investigating it for pedestrian safety applications, pre-crash detection and lane detection, among other areas.

To predict crashes, one must be able to reliably estimate the time to collision between moving objects. Currently, infrared imaging is used for this purpose: it scans the areas in front of and behind the vehicle to estimate the approximate distance to other vehicles and to detect moving objects and lane markers in its path. This cannot be done with conventional CMOS vision sensors and requires dedicated sensors.
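Once a sensor supplies distances, the simplest time-to-collision estimate divides the current range by the closing speed obtained from two successive readings. The sketch below assumes constant relative velocity and noise-free ranges; practical systems filter the measurements and model acceleration. The function name is illustrative.

```python
import math


def time_to_collision(range_prev_m: float, range_now_m: float, dt_s: float) -> float:
    """Constant-velocity time to collision from two range readings dt_s apart."""
    closing_speed = (range_prev_m - range_now_m) / dt_s  # m/s, positive when closing
    if closing_speed <= 0:
        return math.inf  # holding distance or moving apart: no collision predicted
    return range_now_m / closing_speed


# An obstacle that moved from 21 m to 20 m in 0.1 s is closing at 10 m/s.
print(time_to_collision(21.0, 20.0, 0.1))
```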

The “pre sense city” system marketed by Audi AG uses a photonic mixing device to measure distances in 3-D and to apply the brakes intelligently. The system can reduce speed by as much as 30 km/h, minimizing collision impact and damage. It can detect pedestrians at distances of up to 20 m, increasing the time the driver has to respond and prevent a collision. 3-D cameras are also being investigated for so-called out-of-position driver detection, which measures the position and posture of the driver and passenger for “smart” airbag deployment.

Three-dimensional images are generated by combining a 2-D perspective image with information on the distance to objects in the scene. Any conventional camera can provide the 2-D perspective image; distance is computed using time of flight, interferometry or optical triangulation.

These distance-estimation methods require dedicated image sensors. In time of flight, an object is illuminated by an intensity-modulated light source, and the delay of the reflected signal arriving at each pixel of the vision sensor matrix, measured as a phase shift of the modulation, gives the distance of the object from the sensor. Time-of-flight sensors usually offer high distance-measurement quality. Interferometric measurements are more accurate; however, they must be performed in a controlled environment and over distances of no more than a few tens of meters.
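The phase measurement can be sketched with the widely used four-sample scheme for continuous-wave time of flight. The sketch assumes each pixel samples the demodulated return at 0°, 90°, 180° and 270° of the modulation period, following the model a_k = offset + amp * cos(phi + k * pi / 2); sign conventions differ between devices, and nothing here describes a specific commercial sensor.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s


def tof_distance(a0: float, a1: float, a2: float, a3: float, f_mod_hz: float) -> float:
    """Distance from four samples of the demodulated return taken 90 degrees apart.

    Under the assumed model, a3 - a1 = 2*amp*sin(phi) and a0 - a2 = 2*amp*cos(phi).
    """
    phi = math.atan2(a3 - a1, a0 - a2) % (2 * math.pi)  # phase delay in [0, 2*pi)
    # The light travels out and back, so distance = c * phi / (4 * pi * f_mod).
    return SPEED_OF_LIGHT * phi / (4 * math.pi * f_mod_hz)


def unambiguous_range(f_mod_hz: float) -> float:
    """Beyond this distance the phase wraps around and readings alias."""
    return SPEED_OF_LIGHT / (2 * f_mod_hz)


print(f"{unambiguous_range(20e6):.2f} m")  # for an assumed 20 MHz modulation
```

The offset term, which includes ambient light, cancels in the two differences, which is one reason the four-sample scheme is popular; the trade-off is that a higher modulation frequency improves depth resolution but shortens the unambiguous range.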

In optical triangulation, a laser diode projects a light spot onto the surface of the object. The reflected light is imaged, and the position of the spot on the sensor is evaluated to obtain the distance. Unlike time of flight, this principle does not use a modulated light source. Optical triangulation techniques are best suited to short-range measurement. However, these systems are not suitable for compact real-time implementations, because they require too much computation, which increases response time and system cost.
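The short-range preference follows from the geometry. In the simplest arrangement, assumed here, the laser beam runs parallel to the camera's optical axis at a lateral offset (the baseline b), the lens is an ideal pinhole of focal length f, and similar triangles give depth z = f * b / x, where x is the spot's offset on the sensor. The function names and numbers are illustrative.

```python
def triangulation_depth(spot_offset_m: float, focal_length_m: float, baseline_m: float) -> float:
    """Depth from the image offset of a laser spot (beam parallel to the optical axis)."""
    return focal_length_m * baseline_m / spot_offset_m


def depth_resolution(depth_m: float, focal_length_m: float, baseline_m: float,
                     pixel_pitch_m: float) -> float:
    """Depth change matching a one-pixel shift of the spot: about z**2 * pitch / (f * b)."""
    return depth_m ** 2 * pixel_pitch_m / (focal_length_m * baseline_m)


# Assumed setup: 8 mm lens, 10 cm baseline, 5 um pixels.
for z in (2.0, 20.0):
    print(f"z = {z} m: one pixel ~ {depth_resolution(z, 0.008, 0.1, 5e-6):.3f} m of depth")
```

Because the depth step per pixel grows with the square of distance, these assumed values give about 2.5 cm per pixel at 2 m but about 2.5 m per pixel at 20 m.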

In India, lane markers are meant to help vehicles stay in their lanes and to assist drivers in passing and turning. However, it is not uncommon to see vehicles driving over the dotted white lines, leaving others on the road guessing which way the driver might turn. Given the volume of traffic and the behavior of Indian drivers, people typically have very little time to react to pedestrians or animals that unexpectedly enter their field of view.

Since costly systems such as pre sense city would not be practical for most Indians, there is a need for sensors that are low-power, small, cost-effective and simple to operate. One possibility is a technology inspired by flying insects, which detect obstacles in their flight path efficiently and with little computational power by using “optic flow,” which creates a flickering effect in their eyes. Optic flow is the pattern of apparent motion of objects, surfaces and edges in a visual scene caused by the relative motion between an observer and the scene.

Optic flow contains information about self-motion and the distance to potential obstacles. Its computation can be simplified and used to predict when vehicles or pedestrians will enter a moving vehicle’s field of view. Such a system could be fast, reliable and inexpensive, and it could easily be adapted to vehicles for Indian roads.
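One classic model of how insects extract motion cheaply is the Hassenstein-Reichardt correlator, which multiplies a delayed signal from one photoreceptor by the current signal of its neighbor. The sketch below is an illustration of that model, not the sensor design proposed here: it uses a one-frame delay in place of the low-pass filter of the original model and a 1-D row of receptors, and the function names are hypothetical.

```python
from typing import List, Sequence


def reichardt_response(a: Sequence[float], b: Sequence[float]) -> float:
    """Elementary motion detector for two neighboring receptors over time.

    Each arm multiplies one receptor's delayed signal by its neighbor's current
    signal; subtracting the mirror arm makes the output direction-selective:
    positive for motion from a to b, negative for motion from b to a.
    """
    total = 0.0
    for t in range(1, len(a)):
        total += a[t - 1] * b[t] - b[t - 1] * a[t]
    return total


def motion_map(frames: List[List[float]]) -> List[float]:
    """Apply a detector between every pair of adjacent receptors in a 1-D 'eye'."""
    n = len(frames[0])
    return [
        reichardt_response([f[i] for f in frames], [f[i + 1] for f in frames])
        for i in range(n - 1)
    ]


# A bright spot stepping one receptor to the right per frame.
frames = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
print(motion_map(frames))
```

Each detector needs only a delay, two multiplications and a subtraction per time step, which is the kind of low-cost computation that makes insect-style motion sensing attractive for inexpensive vehicle hardware.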

In sum

CMOS vision sensors are highly beneficial for the automotive sector. The industry is moving towards technologies that provide panoramic vision and/or three-dimensional imaging, along with night vision ability and enhanced signal processing in order to allow for intelligent decisions within sensors. The result will be safer roads in India and around the world. The challenging specifications of Indian streets offer plentiful opportunities for developing and testing devices. When it comes to driver safety, if you can make it here, you can make it anywhere!

Mukul Sarkar is an assistant professor in the department of electrical engineering at the Indian Institute of Technology, Delhi.