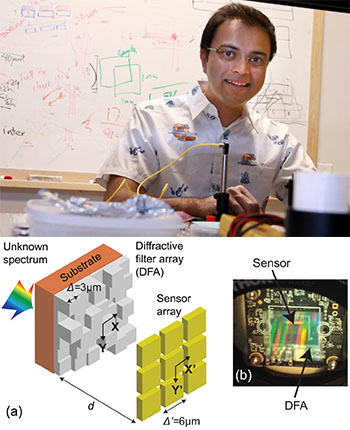

(Top) Rajesh Menon, who, with grad student Peng Wang, has designed a new approach for capturing color information on digital cameras. (Bottom left) Each pixel in the sensor array is overlain by a unit cell of a transparent filter array that diffracts incident light into an intensity pattern on the sensor. The pixel color for the final image is then backed out through a computer algorithm. (Bottom right) Photo of the diffractive-filter/sensor assembly. [Images: University of Utah College of Engineering/Dan Hixson (top); P. Wang and R. Menon, Optica, doi: 10.1364/OPTICA.2.000933 (bottom)]

The ubiquitous smartphone camera is a miracle of miniaturization and technology. But even these cameras don’t perform that well in low-light situations, in which the images captured can be dark, uneven and grainy.

“Low-light photography is not quite there yet,” says Rajesh Menon, a professor of electrical and computer engineering at the University of Utah (USA). And, along with grad student Peng Wang, Menon has proposed a way to fix that: a combination of a transparent, diffractive color filter for the camera, and computational optics to back out the true colors from the diffracted filter signal (Optica, doi: 10.1364/OPTICA.2.000933).

From absorption to transmission

Digital cameras have trouble in low light because the conventional filter arrays used to pass along color information work, in a sense, by blocking light rather than transmitting it. In a conventional camera, each spatial pixel in the camera image sensor (CMOS or CCD) is overlain by an array of color subpixels, each of which transmits one of the primary colors (red, green or blue) while absorbing the other two. That means that in most situations the filters block roughly two-thirds of the available light, so image quality degrades quickly when there isn’t much light to go around.

Wang and Menon have demonstrated a very different approach. First, for each CMOS or CCD sensor pixel, they replace the overlying color subpixel array with a completely transparent, diffractive filter array around a micron thick, with the filter designed to provide a unique diffracted intensity pattern for every wavelength. The light traveling through the filter, a mix of wavelengths, thus creates a specific intensity distribution that’s read by the sensor array.

At that point, the phone’s software takes over: Using the team’s calibrations of the filter’s expected intensity response as a function of wavelength, an optimization algorithm maps the intensity pattern recorded by the sensor to the most likely pixel color for the final digital image.
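
The article does not spell out the optimization itself, but the general idea of inverting a calibrated filter response can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: the calibration matrix `A` is random rather than measured, the sizes `n_sensor` and `n_bands` are arbitrary, and regularized least squares stands in for whatever algorithm the Utah team actually uses.

```python
import numpy as np

rng = np.random.default_rng(0)

n_sensor = 64  # sensor readings under one filter unit cell (assumed)
n_bands = 8    # number of calibrated wavelength bands (assumed)

# Hypothetical calibration matrix: column j holds the intensity pattern
# the sensor would record for unit-power light in wavelength band j.
# A real system would measure this; random values are a stand-in.
A = rng.random((n_sensor, n_bands))


def recover_spectrum(A, b, reg=1e-3):
    """Estimate per-band power from a sensor pattern b.

    Solves the Tikhonov-regularized least-squares problem
    min ||A s - b||^2 + reg * ||s||^2, then clips negative values,
    since light power cannot be negative.
    """
    n = A.shape[1]
    s = np.linalg.solve(A.T @ A + reg * np.eye(n), A.T @ b)
    return np.clip(s, 0.0, None)


# Simulate one pixel: a mix of wavelengths passes through the filter
# and produces a single combined intensity pattern plus a little noise.
true_spectrum = np.array([0.0, 0.1, 0.8, 0.3, 0.0, 0.0, 0.5, 0.2])
b = A @ true_spectrum + 1e-3 * rng.standard_normal(n_sensor)

estimate = recover_spectrum(A, b)
print(np.round(estimate, 2))
```

In this toy setup the recovered per-band powers land close to the simulated ones because there are many more sensor readings than wavelength bands. Converting the estimate into a displayed red, green and blue value would take an additional color-mapping step that is not shown here.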

Sensitive and scalable

A big advantage of the system for low-light situations, of course, is that it allows substantially more light to get to the image sensor; Wang and Menon say their experiments showed that sensitivity can be enhanced by a factor of as much as 3.12. The filter also should be easy to manufacture, according to the team, as it’s fabricated with standard grayscale lithography and imprinting techniques. And because it’s transparent and the intensity distributions have been calculated for multiple wavelengths (rather than just red, green and blue), Menon suggests that the filter can provide “a more accurate representation of color.”

According to a University of Utah press release, Menon is now attempting to commercialize the filter for smartphone use through a new company, Lumos Imaging. But he notes that the technology could have applications well beyond better low-light selfies. For example, the study included a simple experiment suggesting that the system could be used for single-shot hyperspectral imaging. And the team believes that the technology could be employed by industrial robots, drones, self-driving cars and other systems in which precise, fast and automated color analysis is necessary. “In the future,” says Menon, “you need to think about designing cameras not just for human beings, but for software, algorithms and computers.”