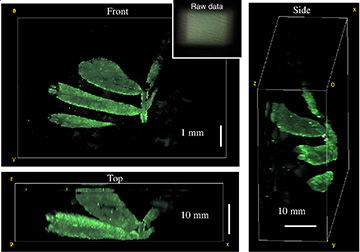

Researchers use the DiffuserCam to reconstruct the 3-D structure of leaves from a small plant. The image grid has been cropped to 480×320×128 voxels. [Image: Laura Waller / University of California, Berkeley]

Laura Waller and her team at the University of California, Berkeley, USA, report developing a lensless camera that combines a sensor, a sheet of bumpy plastic and sophisticated algorithms to produce high-resolution 3-D images from a single 2-D image (Optica, doi: 10.1364/OPTICA.5.000001). In a demonstration of the camera system, the team says it was able to reconstruct 100 million voxels (3-D pixels) from one 1.3-megapixel 2-D image. The researchers say the new camera, called the DiffuserCam, is “cheap, compact and easy to build” and could prove useful in applications ranging from driverless cars to brain imaging.

Simple hardware + complex software

Like a traditional light-field camera, the DiffuserCam uses a sensor, a diffuser and computational software to create 3-D images from a single 2-D image without scanning. However, the UC Berkeley design swaps the typical diffuser (a precisely aligned and expensive microlens array) for a cheap, bumpy sheet of plastic that encodes the amount and angle of light reaching each pixel on the sensor.

The DiffuserCam can get away with using a randomly patterned diffuser because the system’s sophisticated computational-imaging software can be calibrated to compensate for any uneven surface. The user simply needs to capture a few frames of light moving across the diffuser before imaging an object. (Waller and her colleagues tested the calibration process using diffusers made of privacy glass stickers, Scotch tape and conference badge holders.)
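To picture why a few calibration frames can stand in for precisely aligned optics, it can help to sketch a forward model. The sketch below rests on assumptions not stated in this article: that calibration yields one sensor pattern per depth plane, and that the recorded image is the sum of each depth slice of the scene convolved with its pattern. The names `forward_model`, `psf_stack` and `volume` are hypothetical, the patterns are random stand-ins rather than measured data, and circular FFT convolution is used purely for brevity.

```python
import numpy as np

def forward_model(volume, psf_stack):
    """Simulate a 2-D sensor image from a 3-D volume (illustrative only).

    volume, psf_stack: arrays of shape (depths, rows, cols).
    Each depth slice is circularly convolved with that depth's
    calibration pattern, and the results are summed on the sensor.
    """
    if volume.shape != psf_stack.shape:
        raise ValueError("volume and psf_stack must have the same shape")
    # Convolution theorem: multiply the 2-D spectra, then invert.
    spectrum = (np.fft.fft2(volume, axes=(-2, -1))
                * np.fft.fft2(psf_stack, axes=(-2, -1)))
    per_depth = np.real(np.fft.ifft2(spectrum, axes=(-2, -1)))
    return per_depth.sum(axis=0)

rng = np.random.default_rng(0)
psf_stack = rng.random((4, 32, 32))   # stand-in for calibration patterns
volume = np.zeros((4, 32, 32))
volume[2, 5, 7] = 1.0                 # one point source at depth index 2
image = forward_model(volume, psf_stack)
```

Under this model, a single point source reproduces the pattern for its depth, shifted to the source's lateral position. That is the sense in which recorded patterns, rather than a precisely manufactured lens array, can describe how the camera responds to a scene.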

In fact, the researchers say that a randomly patterned diffuser improves DiffuserCam performance. When fed into their new nonlinear reconstruction algorithm, data from an uneven diffuser surface enable compressed sensing, which eliminates the typical loss of resolution that comes with microlens arrays.
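The compressed-sensing idea can be illustrated with a toy problem: recovering a sparse signal from fewer measurements than unknowns. The sketch below is not the team's algorithm. It swaps in a generic iterative soft-thresholding (ISTA) solver, uses a random Gaussian matrix as a loose stand-in for a random diffuser, and picks all sizes and parameters (`n`, `m`, `k`, `lam`, `iters`) purely for illustration.

```python
import numpy as np

def soft_threshold(x, t):
    """Shrink each entry toward zero by t (proximal step for the L1 norm)."""
    return np.sign(x) * np.maximum(np.abs(x) - t, 0.0)

def ista(A, y, lam=0.02, iters=5000):
    """Minimize 0.5*||A x - y||^2 + lam*||x||_1 by iterative soft thresholding."""
    step = 1.0 / np.linalg.norm(A, 2) ** 2   # 1 / (largest singular value)^2
    x = np.zeros(A.shape[1])
    for _ in range(iters):
        grad = A.T @ (A @ x - y)             # gradient of the data-fit term
        x = soft_threshold(x - step * grad, step * lam)
    return x

rng = np.random.default_rng(0)
n, m, k = 200, 100, 5                        # unknowns, measurements, nonzeros
A = rng.standard_normal((m, n)) / np.sqrt(m) # random "measurement" matrix
x_true = np.zeros(n)
support = rng.choice(n, size=k, replace=False)
x_true[support] = rng.choice([-1.0, 1.0], size=k)
y = A @ x_true                               # only m < n measurements

x_hat = ista(A, y)
rel_error = np.linalg.norm(x_hat - x_true) / np.linalg.norm(x_true)
```

Even though there are half as many measurements as unknowns, the sparsity penalty lets the solver land close to the true signal. Random, unstructured measurements are the classic setting in which this kind of recovery works well.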

Computational camera performance

To determine the size and location of the sampling grid for their imaging demonstration, Waller and her colleagues first analyzed the DiffuserCam’s resolution, its volumetric field of view (FOV) and the validity of their computational convolution model. Of the 2,048×2,048×128 voxel FOV, they identified the central 100 million voxels as the usable portion for the sampling grid. Next, they used the DiffuserCam to reconstruct 3-D images of a small plant from multiple perspectives. (The researchers note that the effective resolution varies significantly with the complexity of the object being imaged.)
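The figures quoted in this article can be put side by side with some quick arithmetic. The snippet below treats "100 million" and "1.3-megapixel" as exact round numbers, which is an assumption, and the variable names are illustrative.

```python
full_fov = 2048 * 2048 * 128     # voxels in the full field of view
usable = 100_000_000             # central usable voxels (round figure)
sensor_pixels = 1_300_000        # 1.3-megapixel 2-D image (round figure)
cropped = 480 * 320 * 128        # voxels in the cropped plant image grid

usable_fraction = usable / full_fov        # share of the FOV that is usable
voxels_per_pixel = usable / sensor_pixels  # unknowns per measured pixel

print(f"full FOV: {full_fov:,} voxels")
print(f"usable fraction: {usable_fraction:.1%}")
print(f"voxels per sensor pixel: {voxels_per_pixel:.1f}")
print(f"cropped grid: {cropped:,} voxels")
```

On these numbers, the usable region is a little under a fifth of the full grid, and the reconstruction estimates roughly 77 voxels for every sensor pixel, which is why a sparsity-based method is needed at all.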

The team hopes to upgrade the DiffuserCam design by eliminating the calibration step (relying on raw data instead), improving the accuracy of the software and speeding up the 3-D image reconstruction process.