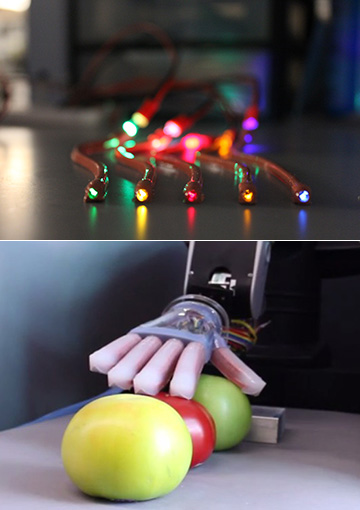

(Top) The strain sensors consist of flexible, elastomer-fashioned multimode fibers for which the amount of stretching, bending, or pressure can be inferred from optical loss through the waveguide. (Bottom) Among other tasks, a strain-sensor-equipped prosthetic hand was able to distinguish the ripest of three tomatoes by pressing lightly upon it. [Image: Huichan Zhao, Organic Robotics Lab, Cornell University]

An engineering team led by Robert Shepherd of Cornell University, USA, has fashioned photonic strain sensors out of easy-to-fabricate elastomer waveguides, and used them to provide fine-scale tactile feedback to a soft, flexible prosthetic hand (Sci. Robot., doi: 10.1126/scirobotics.aai7529). The waveguides, capable of sensing differences in surface roughness on the order of 100 µm, enabled the hand to accomplish a number of tasks, including picking the ripest tomato from a group of three in multiple trials.

The need for flexible sensors

In recent years, soft prosthetics, driven by fluidic mechanisms such as compressed air, have garnered a lot of attention as a more realistic, mobile and lifelike alternative to bulkier, motor-driven prosthetic systems. But the sticking point has been getting tactile sensors into such soft prosthetics. While motor-driven prosthetic systems have developed sophisticated sensing functions through such add-ons as multi-axial force/torque load cells, those additions tend to be rigid and difficult to implement in a soft, flexible hand. And more flexible, stretchable electrical sensors can be unreliable, difficult to fabricate, and not always safe to use.

The Cornell group sought a way around those problems through an optoelectronic approach—which it achieved by putting together some recent advances in optical waveguides, 3-D printing, soft lithography and soft robotics. At the heart of the team’s system are multimode, stretchable step-index optical fibers that are “fabricated to be intentionally lossy”—with the amount of light loss increasing the more the waveguide is deformed. An LED at one end of the fiber provides the guided-light source; a photodetector at the other end reads the light that gets through to the end of the fiber, allowing the light power loss to be mapped to the relative strain in the waveguide.
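The mapping from detected light to strain can be illustrated with a short sketch. It assumes the conventional decibel definition of power loss relative to an undeformed baseline, and a piecewise-linear calibration curve. The function names and the calibration numbers are hypothetical placeholders chosen for illustration; they are not taken from the study.

```python
import math
from bisect import bisect_right


def optical_loss_db(baseline_power, measured_power):
    """Power loss in dB relative to the undeformed (baseline) reading."""
    if baseline_power <= 0 or measured_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(baseline_power / measured_power)


# Hypothetical calibration pairs (loss in dB, relative strain), sorted by loss.
# Real values would come from characterizing an actual waveguide.
CALIBRATION = [(0.0, 0.0), (1.0, 0.2), (2.0, 0.4), (3.0, 0.6)]


def strain_from_loss(loss_db, table=CALIBRATION):
    """Piecewise-linear interpolation of strain, clamped to the table ends."""
    losses = [loss for loss, _ in table]
    if loss_db <= losses[0]:
        return table[0][1]
    if loss_db >= losses[-1]:
        return table[-1][1]
    i = bisect_right(losses, loss_db)
    (l0, s0), (l1, s1) = table[i - 1], table[i]
    return s0 + (s1 - s0) * (loss_db - l0) / (l1 - l0)


if __name__ == "__main__":
    # Photodetector reads 70% of the baseline power.
    loss = optical_loss_db(1.0, 0.7)
    print(f"loss = {loss:.2f} dB, estimated strain = {strain_from_loss(loss):.2f}")
```

In practice each deformation mode (elongation, bending, pressing) would likely need its own calibration curve, since the same loss value can arise from different kinds of deformation.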

Optoelectronic “nerves”

The team created the fiber by surrounding a high-index elastomer core with a lower-index elastomer cladding; the total cross-sectional area of the waveguide assembly is around 4 mm². The fabrication method is a simple mold-based approach, involving pouring the cladding and core materials in layers into a pre-formed mold and allowing them to cure. (Interestingly, the team used 3-D printing to create the molds, an approach that, according to the researchers, allows “design freedom” to quickly and cheaply create more complex shapes as required.)
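The core must have the higher index because a step-index waveguide confines light by total internal reflection at the core-cladding boundary. The sketch below computes the two standard quantities that follow from the index pair: the numerical aperture and the critical angle. The index values in the usage example are illustrative placeholders, not figures reported in the article.

```python
import math


def numerical_aperture(n_core, n_clad):
    """Numerical aperture of a step-index waveguide: sqrt(n_core^2 - n_clad^2)."""
    if n_core <= n_clad:
        raise ValueError("guiding requires n_core > n_clad")
    return math.sqrt(n_core**2 - n_clad**2)


def critical_angle_deg(n_core, n_clad):
    """Critical angle at the core-cladding interface, measured from the normal."""
    if n_core <= n_clad:
        raise ValueError("guiding requires n_core > n_clad")
    return math.degrees(math.asin(n_clad / n_core))


if __name__ == "__main__":
    # Placeholder indices for a hypothetical elastomer core and cladding.
    n_core, n_clad = 1.46, 1.40
    print(f"NA = {numerical_aperture(n_core, n_clad):.3f}")
    print(f"critical angle = {critical_angle_deg(n_core, n_clad):.1f} degrees")
```

Rays that strike the boundary at less than the critical angle escape into the cladding. This is one textbook route by which bending a waveguide increases loss, although the article does not specify which loss mechanisms dominate in these sensors.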

Next, with the waveguides formed, the team set about characterizing their actual response to various kinds of deformation—elongation, bending, and the pressing force that would be experienced by a fingertip. With those detailed data in hand, the researchers proceeded to a proof of concept, snaking the fibers like nerve filaments through the fingers of a soft, pneumatically controlled robotic hand.

The tomato test

They then put the hand through a series of tests with different materials and situations, using the haptic feedback from the optoelectronic strain sensors to guide the hand’s actions in tasks involving shape and texture detection, softness detection and object recognition. Using the fiber optic sensors, for example, the hand was able to distinguish differences in textural roughness on the order of 100 µm in various materials, which is sensitive for this kind of application (albeit nowhere near the nanometer-scale sensitivity of a human finger).

In the most photogenic of the team’s demonstrations (documented in a YouTube video), the researchers lined up three tomatoes of varying ripeness. The robotic hand, pressing gently with its “index finger” on each of the three, was able to pick out the most yielding, and hence ripest, tomato in multiple tries, based on the optical loss read in the waveguide.

The authors of the study acknowledge that the prototype hand “still has many aspects that can be improved on.” For example, sensitivity could be increased by using high-power laser diodes instead of LEDs as the light source, and machine-learning techniques could refine the calibration and overcome artifacts such as signal coupling across sensors. Nonetheless, the team believes that this first demonstration shows the potential of stretchable waveguide sensors to help move soft-prosthetic development forward.