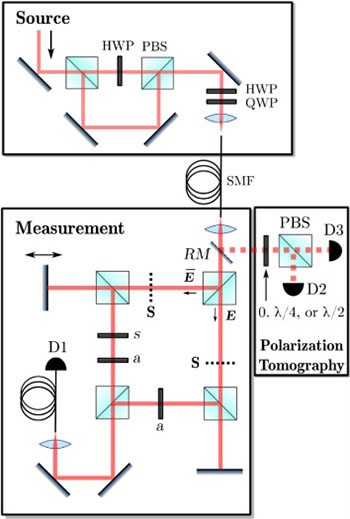

Experimental setup, involving classically entangled light, used by the Rochester team to test the Bell inequality. [Image: Qian et al., Optica, doi: 10.1364/OPTICA.2.000611]

The phenomenon of entanglement—the “spooky action at a distance” so memorably described by Albert Einstein—seems almost synonymous with the quantum realm. But it’s been known for years that entanglement, albeit of a conspicuously local variety, can exist in non-quantum light fields as well, a fact that has made the classical-quantum boundary a bit fuzzy on the map.

One key measure, the so-called Bell inequality, has long served physicists as a guidepost for drawing the line between classical and quantum systems. But recently published experiments show that the inequality fails to mark the classical-quantum divide for classical light fields that include a dose of entanglement. Indeed, in the view of the researchers behind the study, the inequality can no longer be reliably used to mark that boundary at all (Optica, doi: 10.1364/OPTICA.2.000611).

A long-standing quantum litmus test

Bell’s inequality grew out of physicist John Bell’s 1964 response to the celebrated “EPR paradox.” In that 1935 argument, Einstein and coauthors Boris Podolsky and Nathan Rosen contended that, in light of entanglement, quantum mechanics could not be considered a “complete” theory of reality. That implied it must be supplemented by “local hidden variables” that would explain entangled action at a distance and thereby give the theory a deterministic footing.

Bell’s response was a theorem showing that no theory built on local hidden variables can reproduce all of the correlations that quantum mechanics predicts for entangled systems. He expressed the theorem as a specific, much-discussed relationship: the Bell inequality.

In 1969, in a study in Physical Review Letters, John Clauser and three colleagues proposed a modified form of the Bell inequality that could be tested in a realistic experiment, making it a practical indicator of whether a system behaves quantum mechanically. Since then, physicists have commonly understood “violations” of Bell’s inequality as a signal that a system’s behavior is governed by quantum rather than classical physics.
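For reference, that modified form is now usually called the CHSH inequality, after Clauser, Michael Horne, Abner Shimony and Richard Holt. In its standard textbook statement, each side of the experiment uses two analyzer settings (a and a′ on one side, b and b′ on the other), and E(x, y) is the measured correlation of outcomes, between −1 and +1, for settings x and y. A measured S greater than 2 is what is meant by a "violation":

```latex
S = E(a,b) - E(a,b') + E(a',b) + E(a',b'), \qquad
|S| \le 2 \ \text{(local hidden variables)}, \qquad
|S| \le 2\sqrt{2} \ \text{(quantum mechanics)}
```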

The Bell paradigm broken?

A team of scientists led by Joseph Eberly of the University of Rochester (USA) put Bell’s paradigm to the test using a light field that, although classical, had been carefully prepared to display entanglement (specifically, entanglement involving the field’s polarization). The measurements were made on the entire classical field, not on individual photons as in quantum entanglement experiments. (Classical entanglement has been known for more than thirty years, but because it is a local phenomenon, it has not captivated the physics community the way “spooky” quantum entanglement has.)
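In the literature on classical entanglement, "entangled" means non-separable: the field's polarization cannot be factored apart from a second degree of freedom of the same beam. The minimal sketch below assumes the field is described by a 2×2 matrix of amplitudes pairing two polarization modes with two modes of that other (here unspecified) degree of freedom, and borrows the concurrence formula from quantum information to score the coupling from 0 (separable) to 1 (maximally non-separable). The function name is hypothetical, and this is an illustration of the idea, not the Rochester team's method.

```python
def concurrence(c):
    """Score non-separability of a 2x2 amplitude matrix (hypothetical helper).

    c[i][j] pairs polarization mode i (e.g. horizontal/vertical) with mode j
    of a second degree of freedom. Returns 0 for a field that factors into
    (polarization) x (other mode) and 1 for maximal non-separability.
    """
    total = sum(abs(c[i][j]) ** 2 for i in range(2) for j in range(2))
    det = c[0][0] * c[1][1] - c[0][1] * c[1][0]
    return 2 * abs(det) / total


# Diagonal coupling: H goes with mode 1, V with mode 2, so no single
# polarization describes the whole beam.
print(concurrence([[1, 0], [0, 1]]))  # 1.0
# Same (diagonal) polarization in both modes: the field is a simple product.
print(concurrence([[1, 1], [1, 1]]))  # 0.0
```

Dividing by the total intensity makes the score independent of overall beam power, since both the determinant and the total scale with the square of the amplitudes.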

Plugging their results into the Bell inequality, the Rochester scientists obtained a “Bell parameter” of 2.54, “many standard deviations” above the inequality’s theoretical limit of 2: a clear violation. In other words, the Bell inequality had classified a classical system as a quantum one, and thus had failed as a guidepost to the quantum-classical boundary.
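To make the numbers concrete, here is a minimal Python sketch of the textbook CHSH calculation for an idealized field with two coupled two-mode degrees of freedom. It assumes real amplitudes, perfect analyzers and the standard CHSH angles; the function names are hypothetical. The same 2×2 amplitude matrix could describe either a two-photon quantum state or a classical beam whose polarization is coupled to a second degree of freedom, which is why the arithmetic alone cannot tell the two apart. It is not the Rochester team's analysis code.

```python
import math

# Hypothetical helper names; a textbook CHSH calculation with real amplitudes.
RIGHT_ANGLE = math.pi / 2  # analyzer rotated by 90 degrees = the orthogonal outcome


def joint_probability(c, a, b):
    """Fraction of total intensity passing analyzers set at angles a and b (radians).

    c[i][j] is the real amplitude pairing basis mode i of the first degree
    of freedom with basis mode j of the second.
    """
    ua = (math.cos(a), math.sin(a))
    ub = (math.cos(b), math.sin(b))
    amplitude = sum(ua[i] * c[i][j] * ub[j] for i in range(2) for j in range(2))
    total = sum(c[i][j] ** 2 for i in range(2) for j in range(2))
    return amplitude ** 2 / total


def correlation(c, a, b):
    """E(a, b): weight +1 for matching outcomes, -1 for opposite ones."""
    return (joint_probability(c, a, b)
            + joint_probability(c, a + RIGHT_ANGLE, b + RIGHT_ANGLE)
            - joint_probability(c, a, b + RIGHT_ANGLE)
            - joint_probability(c, a + RIGHT_ANGLE, b))


def chsh_parameter(c, a, a_prime, b, b_prime):
    """S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(c, a, b) - correlation(c, a, b_prime)
            + correlation(c, a_prime, b) + correlation(c, a_prime, b_prime))


# Standard CHSH angles: a = 0, a' = 45 deg, b = 22.5 deg, b' = 67.5 deg.
ANGLES = (0.0, math.pi / 4, math.pi / 8, 3 * math.pi / 8)

non_separable = [[1.0, 0.0], [0.0, 1.0]]  # cannot be factored into a product
separable = [[1.0, 0.0], [0.0, 0.0]]      # a simple product of modes

print(f"non-separable S = {chsh_parameter(non_separable, *ANGLES):.3f}")
print(f"separable     S = {chsh_parameter(separable, *ANGLES):.3f}")
```

Run as written, it prints S = 2.828 for the non-separable matrix (2√2, the largest value quantum mechanics allows) and S = 1.414 for the separable one, inside the limit of 2. The reported value of 2.54 falls between the limit of 2 and that ideal ceiling.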

The study’s authors suggest that the results call for rethinking the Bell inequality as a marker separating classical systems from quantum ones. Instead, it may simply gauge the presence of entanglement, whether classical or quantum. “Bell violation has less to do with quantum theory than previously thought,” the authors conclude, “but everything to do with entanglement.”