In NASA’s “Destination: Mars” exhibit, which uses Microsoft’s HoloLens mixed-reality headset, Apollo 11 astronaut Buzz Aldrin stands within a panoramic view of the martian surface. [NASA/JPL-Caltech/Microsoft]

After decades of development and years of false starts, the time for 3-D head-mounted displays seems to have come at last. Schools are now testing an app, called “Expeditions,” that uses the cheap Google Cardboard attachment to take students on virtual field trips with their smartphones. Theme parks are reviving their aging roller coaster rides by adding virtual-reality (VR) headsets, so riders can take a synchronized tour of outer space or fly along with Superman.

Dedicated headsets that put gamers into the middle of 3-D worlds were the talk of the Game Developers Conference, which drew 26,000 people to San Francisco in March 2016. Also in March, Facebook’s highly publicized, widely praised Oculus Rift head-mounted display began consumer deliveries.

Yet an old question lurks behind these new scenes: Is the new technology good enough to protect users from the nausea-inducing effects, similar to motion sickness, that have been show-stoppers in the past? VR depends on optical tricks to generate the illusion of 3-D worlds and motion, but those tricks can’t fool all the senses—and eventually our sensory systems tend to rebel. Even 3-D movies and television are enough to make some people sick. Will isolating the user still further from reality make things even worse?


Deja vu

The new wave of VR enthusiasm is giving veteran technology watchers a queasy feeling of deja vu. Back in 1991, Sega, then a major maker of video games, announced plans for what then seemed the next big thing in electronic gaming: an immersive gaming system called Sega VR. The concept—involving a pair of headset-mounted, computer-driven liquid-crystal screens that showed a virtual world in 3-D stereo—got wide publicity, but its schedule kept slipping. The last public demonstration was at the 1993 Consumer Electronics Show.

When it later abandoned development, Sega said it was because players had become so “immersed” that they could injure themselves. But the real story was that then-available screens could display only 4 to 12 frames per second (fps)—much too slow to keep up with users turning their heads. In fact, that frequency was particularly effective at making users sick, said Tom Piantanida, a now-retired member of a consulting team that tested the goggles, who called the 4-to-12-fps rate the “barfogenic zone.”

The problem was far from unique to VR. The U.S. military discovered simulator sickness soon after introducing helicopter flight simulators in the mid-1950s. Like motion sickness, it comes from having the eyes see motion that’s different from what the vestibular system feels in the inner ears.

Military pilot trainees are highly motivated and can usually adapt. Entertainment is a different market.

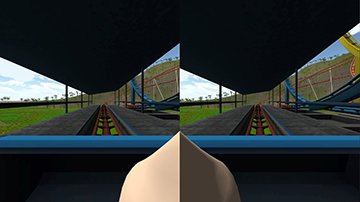

Theme parks are experimenting with new or revamped roller coaster rides, in which riders take a virtual-reality trip synchronized with the twists and turns of the ride. Riders fly virtually through scenes like the one at left as their bodies zoom through the ride. [© Six Flags Theme Parks Inc., 2016]

Conquering latency

In the wake of its early experience in the 1990s, the gaming industry backed away from developing consumer VR. Motion sickness was “a huge problem,” says David Whittinghill, who heads the Games Innovation Laboratory at Purdue University, West Lafayette, Ind., USA. “A large percentage of people we have tried to work with have some level of sickness.”

The central problem for headsets, he says, is the need for a very close match of sensory input from the eyes and the vestibular system. The longer the delay in delivering a new frame (the latency), the greater the discrepancy when the head moves quickly. Research has shown that headsets should deliver at least 90 frames per second to keep that discrepancy within tolerable levels.
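As a rough illustration of why latency matters, the sketch below treats one frame interval as the display delay and multiplies it by the speed of a head turn to estimate how far the image trails the head. The 200-degrees-per-second head speed is an assumed value for a brisk turn, not a figure from the article, and the model ignores any motion prediction a headset may use.

```python
def frame_interval_ms(fps: float) -> float:
    """Time between successive frames, in milliseconds."""
    return 1000.0 / fps


def angular_lag_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """Approximate angle by which the displayed view trails the real head
    direction, assuming a constant head speed and no motion prediction."""
    return head_speed_deg_per_s * latency_ms / 1000.0


# Assumption: a brisk head turn of 200 degrees per second (illustrative only).
HEAD_SPEED = 200.0

for fps in (4, 12, 30, 90):
    latency = frame_interval_ms(fps)  # treat one frame interval as the delay
    lag = angular_lag_deg(HEAD_SPEED, latency)
    print(f"{fps:>3} fps: {latency:6.1f} ms -> {lag:5.1f} deg")
```

Under these assumptions the lag falls from 50 degrees at 4 fps and about 17 degrees at 12 fps, the range Piantanida called the “barfogenic zone,” to about 2 degrees at 90 fps.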

Reaching that rate is a challenge. “A lot of computation is going on” to display the computer-generated images used in games, says Whittinghill. Calculations for a single frame in a conventional game take about 1/30th of a second; thus standard development tools are designed to generate 30-fps scenes. 3-D gaming requires separate frames for each eye, and the computations for both frames must be completed in a much faster 1/90th of a second. Gaming already requires powerful computers, so raising the frame rate implies reducing the complexity of scenes to speed the computations.
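The timing constraint can be written as a simple budget. This sketch assumes, for illustration, that the two eye views are rendered one after the other within the same frame time; real rendering engines can share some work between the eyes, so the result is a rough bound rather than a measurement.

```python
def frame_budget_ms(fps: float) -> float:
    """Total time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def per_eye_budget_ms(fps: float, eyes: int = 2) -> float:
    # Assumption: each eye's view is rendered sequentially within one frame.
    return frame_budget_ms(fps) / eyes


conventional = frame_budget_ms(30)  # one view at 30 fps
vr = per_eye_budget_ms(90)          # two views at 90 fps

print(f"30-fps game: {conventional:.1f} ms per frame")
print(f"90-fps VR:   {vr:.1f} ms per eye view")
print(f"Speed-up needed: {conventional / vr:.0f}x")
```

Under that assumption, each eye’s image must be produced roughly six times faster than a conventional 30-fps frame (about 5.6 ms instead of 33.3 ms), which is why simplifying scenes is often the practical way to hit 90 fps.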

The key breakthrough grew from a series of online-forum discussions about improving the quality and reducing the costs of headsets. That led Palmer Luckey, then 18, to design and build a low-latency prototype in his parents’ garage in Long Beach, Calif., USA, in 2011. Crucially, he used commercial, off-the-shelf hardware, greatly reducing costs while improving performance. Luckey went on to cofound Oculus VR, which raised US$2.4 million on Kickstarter in 2012. In 2014, Facebook bought Oculus VR for US$2 billion in cash and stock.

Other developers jumped in with their own projects. The first to emerge were simple optical systems that relied on smartphones to provide both the displays and the computing power. Dedicated headsets, designed for use on powerful computers or game consoles, followed this year, including offerings from both Oculus and competitor HTC Corporation. Both companies have set strict minimum requirements for computing speed. But, says Whittinghill, consumers may still run games on less powerful machines that can’t keep up with the action at 90 fps—so users could get sick.

Vection and vergence

In addition to a minimum frame rate, headset developers have also set a second key principle for 3-D games: they should be designed to minimize movement of the user’s viewpoint, which creates an illusory sense of self-motion called vection. That means cutting back on some staples of video-game graphics, such as flying, running alongside a hero in a shooting game, or dashing through a series of levels within a maze.

The problem with vection comes from the disparity between what the eyes and the vestibular system tell the gamer. The eyes show forward movement, often at high speed, but the vestibular system feels no such motion. That’s a problem, because many of today’s most popular video games move the user’s viewpoint around to explore the environment or chase bad guys. One way to reconcile that disparity is by moving other objects—so that, for example, the enemy charges toward the player rather than the player toward the enemy.

But there’s another possible solution to the vection problem: actually putting the user in motion, and syncing the user’s VR viewpoint with that physical movement. As long as the movement sensed by the eyes matches the movement sensed by the vestibular system, the body should remain happy, at least in theory.
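One way to keep a virtual viewpoint locked to physical motion is to index the virtual camera path by where the vehicle actually is along the track, rather than by elapsed time, so a slower or faster run still lines up. The sketch below is hypothetical: the keyframe table, the use of a measured track distance, and the reduction of the camera pose to a single height value are all illustrative assumptions, not a description of any park’s system.

```python
from bisect import bisect_right

# Hypothetical keyframes: (distance along the track in m, virtual camera height in m).
# A real system would store a full camera pose; height stands in for it here.
KEYFRAMES = [(0.0, 10.0), (50.0, 40.0), (80.0, 5.0), (120.0, 25.0)]
POSITIONS = [d for d, _ in KEYFRAMES]


def virtual_height(track_distance_m: float) -> float:
    """Camera height for the car's measured position along the track,
    linearly interpolated between keyframes and clamped at both ends."""
    if track_distance_m <= POSITIONS[0]:
        return KEYFRAMES[0][1]
    if track_distance_m >= POSITIONS[-1]:
        return KEYFRAMES[-1][1]
    i = bisect_right(POSITIONS, track_distance_m)
    (d0, h0), (d1, h1) = KEYFRAMES[i - 1], KEYFRAMES[i]
    return h0 + (h1 - h0) * (track_distance_m - d0) / (d1 - d0)


# Hypothetical sensor readings: the view follows the car wherever it actually is.
for measured in (25.0, 65.0, 100.0):
    print(f"car at {measured:5.1f} m -> virtual height {virtual_height(measured):.1f} m")
```

Keying the view to position rather than time means that if the car slows on a hill, the virtual scene slows with it, so the eyes and the inner ear keep reporting the same motion.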

Some theme-park operators are betting on that approach by overhauling existing roller coasters, or building new ones, and equipping them with VR headsets. Riders feel the twists, turns, rises and falls of the roller coaster as their headsets follow the same virtual path on screen. At the Alton Towers theme park in Staffordshire, England, U.K., visitors tour the universe on the park’s new Galactic ride. Six Flags Entertainment is converting six roller coasters to space rides and three to Superman rides. Six Flags design director Sam Rhodes told IEEE Spectrum that adding VR can give a new life to aging roller coasters, without having to spend millions building a new ride.

A separate optical issue with 3-D imaging is vergence, the movement of the eyes in opposite directions to fuse what they see into a single binocular image. The eyes sense that movement separately from their sense of accommodation (that is, how much the eye has to change its focal distance to bring an object into focus). The two cues can conflict if the actual distance of the object differs from the apparent distance—and the mismatch is larger when the actual focal point is on a screen close to the eye in a VR headset.
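A worked example shows the size of the conflict. The sketch computes the vergence angle for a virtual object straight ahead and compares, in diopters (inverse meters), the distance the eyes converge to with the distance they must focus to. The 63-mm interpupillary distance and the 1.0-m focus distance of the displayed image are assumptions chosen for illustration; the article gives no values for any particular headset.

```python
import math

IPD_M = 0.063    # assumed interpupillary distance, meters (typical adult value)
FOCUS_M = 1.0    # hypothetical distance at which the eyes must focus to see the display


def vergence_angle_deg(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m."""
    return math.degrees(2 * math.atan((ipd_m / 2) / distance_m))


def conflict_diopters(vergence_distance_m: float, focus_distance_m: float) -> float:
    """Vergence-accommodation mismatch, in diopters (1/m)."""
    return abs(1 / vergence_distance_m - 1 / focus_distance_m)


for d in (0.3, 1.0, 10.0):
    print(f"object at {d:4.1f} m: vergence {vergence_angle_deg(d):5.2f} deg, "
          f"conflict {conflict_diopters(d, FOCUS_M):.2f} D")
```

Only objects rendered at the focus distance are free of conflict; anything drawn nearer or farther asks the eyes to converge at one distance while focusing at another.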

Choose your reality

Three variations on the theme of stereo-3-D headsets.

Dedicated virtual-reality system


How it works: Headset, connected to high-performance computer or game console, generates stereo images at 90–120 times per second.

Example devices: Oculus Rift; HTC Vive; Sony VR headset

Smartphone virtual-reality system


How it works: VR input comes from the user’s smartphone, which shows a stereo pair of images.

Example devices: Google Cardboard; Mattel View-Master VR; Oculus Gear VR; Zeiss VR

Augmented-reality system


How it works: The headset superimposes 3-D images and data on the user’s view of the real world.

Example devices: Microsoft HoloLens; Meta

The new wave of VR

In the decades since Sega’s initial experiments, VR technology has come a long way in overcoming its early shortcomings. “Virtual reality systems today are impressive,” says Gordon Wetzstein of Stanford University. Today, consumers and developers can choose from three basic variations on the theme of stereo-3-D headsets for VR: systems with a dedicated internal screen; headgear that holds a smartphone as the source of the VR input; and augmented reality, in which the headset superimposes 3-D images and data on the user’s view of the real world.

In the first type, typified by the Oculus Rift, internal optics focus the user’s vision onto a screen that’s typically only a few centimeters away from the user’s eyes. Sensors detect user head movements and directions, with the headset using that information to calculate the images displayed on the screens. The headset is connected to a high-performance computer or game console that generates stereo pairs of images, generally at a rate of 90 times per second.
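The per-eye calculation can be sketched as follows: the tracked head position and heading are turned into two camera positions, offset left and right by half the interpupillary distance, and each camera renders one image of the stereo pair. This simplified version handles only yaw (turning left and right) on a flat plane; the 64-mm eye separation and the coordinate convention are assumptions, and real headset software works with full 3-D orientations.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def eye_positions(head_xy: Vec2, yaw_deg: float, ipd_m: float = 0.064) -> Tuple[Vec2, Vec2]:
    """Return (left_eye, right_eye) positions on the floor plane, in meters.

    Assumed convention, viewed from above: yaw 0 faces +y, and positive yaw
    turns the head clockwise (toward +x). Only yaw is modeled.
    """
    half = ipd_m / 2
    yaw = math.radians(yaw_deg)
    # Unit vector pointing out of the user's right ear.
    right_x, right_y = math.cos(yaw), -math.sin(yaw)
    hx, hy = head_xy
    left_eye = (hx - right_x * half, hy - right_y * half)
    right_eye = (hx + right_x * half, hy + right_y * half)
    return left_eye, right_eye


# Each frame: read the tracked head pose, then render one view from each eye.
left, right = eye_positions((0.0, 0.0), yaw_deg=0.0)
print("left eye:", left, "right eye:", right)
```

Because both cameras are recomputed from the latest pose every frame, any delay between reading the sensors and showing the images appears directly as the latency discussed earlier.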

The US$599 Rift headset, eagerly awaited by gamers, began delivery on 28 March, and a demo of the production version (along with 30 games designed for it) was a highlight of the mid-March Game Developers Conference. A big question had been how much of an advance the new version would be over the Developer Kit 2 (DK2), announced in March 2014 for use in developing software.

DK2 included features that reduced motion blur and jitter, which have been linked to simulator sickness. However, that had not been good enough for many people prone to cyber sickness—such as Whittinghill, who could use it for only a minute and a half before feeling ill. He reports that he suffered no ill effects when he tested the production version at the game conference. “They’re wonderful and I love them,” he says, “and I really look forward to getting my own.”

A key feature of the Rift is an organic LED (OLED) display running at 90 fps, with a resolution of 1080×1200 pixels per eye and a field of view wider than 100 degrees. Audio comes from integrated headphones that provide 3-D sound. A stationary sensing system tracks the user’s position in the room with infrared and optical LEDs that effectively create a grid. Users play the game with handheld controls, and can see the action over a full 360 degrees by turning, with the display matched to head motion. Videos show gamers moving around an open area, turning and gesturing as they play, totally immersed because they can’t see the room.
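The stated specifications imply a substantial data rate. The arithmetic below uses only the figures given above (1080×1200 pixels per eye, two eyes, 90 fps); the 24-bit color depth used for the bandwidth estimate is an assumption, and the result ignores compression and any transmission overhead.

```python
WIDTH, HEIGHT = 1080, 1200  # pixels per eye (Rift figures quoted above)
EYES = 2
FPS = 90
BITS_PER_PIXEL = 24  # assumption: 8 bits each for red, green and blue

pixels_per_frame = WIDTH * HEIGHT * EYES
pixels_per_second = pixels_per_frame * FPS
gigabits_per_second = pixels_per_second * BITS_PER_PIXEL / 1e9

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{pixels_per_second:,} pixels per second")
print(f"~{gigabits_per_second:.1f} Gbit/s uncompressed")
```

That works out to 233,280,000 pixels per second, or roughly 5.6 Gbit/s of raw color data under the 24-bit assumption.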

Two major competitors to Oculus showed full-featured VR headsets with similar hardware at the gaming show. HTC began delivery of its US$799 Vive headset in April; like the Rift, it requires a high-power computer and displays 90 fps. The company has partnered with Valve Software, a gaming specialist, which showed 30 games for the HTC Vive at the gaming show. Sony is taking a slightly different approach, with a VR headset running at 90 or 120 fps on an OLED display and software designed to run on a PlayStation 4. The US$499 PlayStation 4 VR bundle will begin shipping in October. Sony showed 20 games at the game conference.

Google Cardboard is a simple head-mounted box with focusing lenses. The user’s smartphone acts as both computer and display, serving up a stereo pair of images to create a 3-D view. [©Google]

Smartphone-based headsets

A second flavor of VR headset, the most familiar example of which is Google Cardboard, essentially consists of a head-mounted box with focusing lenses, into which the user inserts a smartphone. The phone serves as both computer and display, showing a stereo pair of images. Other companies besides Google have adopted similar designs; the Mattel toy company, for example, has unveiled something it calls View-Master VR, in homage to the company’s classic stereo viewer of film disks from the mid-20th century. As with dedicated headsets, users see only the screens, not the world around them.
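Showing a stereo pair on a single phone amounts to giving each eye half of the screen. The sketch assumes the phone is held in landscape orientation, uses a hypothetical 2560×1440-pixel screen, and splits the display exactly down the middle; actual viewer software may also correct for lens distortion, which is not shown.

```python
from typing import NamedTuple, Tuple


class Viewport(NamedTuple):
    x: int       # left edge, in pixels
    y: int       # top edge, in pixels
    width: int
    height: int


def stereo_viewports(screen_w: int, screen_h: int) -> Tuple[Viewport, Viewport]:
    """Split a landscape screen into left-eye and right-eye halves."""
    half = screen_w // 2
    left = Viewport(0, 0, half, screen_h)
    right = Viewport(half, 0, screen_w - half, screen_h)  # absorbs any odd pixel
    return left, right


left, right = stereo_viewports(2560, 1440)  # hypothetical phone resolution
print(left)
print(right)
```

Each eye therefore sees only half of the phone’s horizontal resolution, one of the trade-offs of bringing your own display.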

The idea of using smartphones as VR displays came later than the idea of stand-alone headsets, but it has a compelling simplicity, and thus viable smartphone-based headsets actually reached the market before dedicated ones. John Quarles of the University of Texas at San Antonio, USA, thinks they may come to dominate the consumer market. “Phones are going to win because everybody already has them.”

Much of the hardware in the two types of headsets is actually similar, says Wetzstein. The processors, sensors and small high-resolution screens used in dedicated headsets are the same types used in smartphones. But in dedicated headsets, it’s all optimized for VR, while phones are more general-purpose devices. The big question is how many phone-equipped consumers will value the higher performance of a dedicated VR headset enough to pay the price premium.

The savings of bringing your own phone can indeed be huge—enough so that, last November, Google was able to bundle unassembled versions of its Cardboard unit for free with 1.3 million copies of the Sunday New York Times. Prices of Google-certified versions start at US$14.99, with more elaborate versions like Mattel’s View-Master VR retailing at US$29.99. Those are price points cheap enough for schools to afford.

Facebook’s Oculus has its own entry in the smartphone-based headset market, a more elaborate version called the Gear VR that pairs with Samsung’s Galaxy S7 and S7 Edge smartphones. According to Wetzstein, the Gear VR, priced at US$99 and with more than 200 apps and games available, is nearly as good as the Rift DK2. The optics firm Zeiss has developed its own US$99 smartphone-based headset, which can use apps written for Google Cardboard and similar platforms.

One Microsoft marketing pitch for its HoloLens product puts a high-tech spin on a familiar human situation: A father, remotely viewing what his daughter sees, highlights on his tablet where she needs to put a piece, and his instructions show up on her AR headset to guide her through the plumbing repair. [Microsoft HoloLens]

Augmented reality

A third alternative, augmented reality (AR), supplements rather than replaces the user’s view of the local environment, adding computer-generated parts of a virtual world to the real world. The 3-D images are projected onto a transparent screen close to the eyes of the user, who sees them as objects superimposed on the real world. The system tracks the user’s head, to see where they are looking, and their hands, so it can make the image move if the user “touches” it. Users are unlikely to get sick, because most visual cues come from the real world.

(While users wearing headsets see 3-D images floating in space or sitting on surfaces, those without headsets, of course, see nothing, because the images are generated within the headset—a detail that’s unfortunately glossed over in many slick marketing images and videos of AR technology.)
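The “touch” interaction described above can be reduced to a proximity test between a tracked fingertip and a virtual object’s position in room coordinates. The sketch below is hypothetical: it models the object as a bounding sphere and moves it along with the finger while the fingertip is inside; it is not how HoloLens or any specific product implements gestures.

```python
import math
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Hologram:
    center: Vec3   # position in room coordinates, meters
    radius: float  # radius of a bounding sphere around the object, meters


def is_touching(fingertip: Vec3, obj: Hologram) -> bool:
    """True if the tracked fingertip lies inside the object's bounding sphere."""
    return math.dist(fingertip, obj.center) <= obj.radius


def drag(obj: Hologram, prev_tip: Vec3, tip: Vec3) -> None:
    """If the fingertip was touching the object in the previous frame,
    move the object by the same displacement as the finger."""
    if is_touching(prev_tip, obj):
        obj.center = tuple(c + (t - p) for c, t, p in zip(obj.center, tip, prev_tip))


cube = Hologram(center=(0.0, 1.2, 0.5), radius=0.1)
drag(cube, prev_tip=(0.0, 1.2, 0.45), tip=(0.1, 1.2, 0.45))
print(cube.center)
```

Because the object is anchored in room coordinates rather than screen coordinates, it stays put when the user turns away, which is part of what keeps AR’s visual cues consistent with the real world.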

Perhaps the biggest player in AR is Microsoft, whose HoloLens AR headset (which actually contains no true optical holograms) began shipping in a pricey US$3,000 development edition on 30 March. NASA is using Microsoft’s technology for a “Destination: Mars” exhibit slated to open in summer 2016 at the Kennedy Space Center at Cape Canaveral, Fla., USA. The low latency of a Microsoft demonstration last year “really impressed” Quarles, but the headset had a narrow field of view and needed dim light. (A much-publicized startup named Magic Leap appears to be doing something similar, but would not discuss details.)

Potential AR applications are evolving along with the technology. Developers are exploring business applications, such as visual displays for collaborative design. Quarles suggests using AR for repairs and maintenance: instead of going back and forth to a manual or video for repair instructions, you could wear a headset that shows you each step as an overlay on what you’re fixing.

Refining the technology

Whether the potential use is in business, education or gaming, developers are working on refinements to enhance the performance of VR optics, reduce the likelihood of simulator sickness and extend the range of applications.

One focus is modifying the VR background to reduce the troublesome discord between vision and the vestibular system. Noting that users had less sickness in systems with fixed reference points, such as a cockpit in a flight simulator, Whittinghill decided to add a virtual nose. “Almost every single terrestrial predator can see their nose,” he says. Suspecting that trait had evolved because it benefited the beasts, he added a nose to his VR system—and found that users not only didn’t seem to notice the addition, but tended to report less severe sickness. He’s planning follow-up experiments to investigate further.

Wetzstein, meanwhile, is trying to reduce the sensory discord between eye accommodation and the vergence produced by stereo images. He has developed a headset with a pair of LCD screens stacked between the eye and a single backlight. The light pattern formed on the screen closest to the backlight is effectively multiplied by the pattern on the front screen in a way that can change the focusing of the eye, a process he calls light field factorization. So far he has demonstrated the concept, but it requires intensive computation to work.
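The multiplicative principle can be illustrated with a toy, one-dimensional version. With a uniform backlight, a ray passing through rear pixel i and front pixel j emerges with brightness proportional to rear[i] × front[j], so two stacked layers can reproduce only target light fields that are close to such an outer product. The sketch finds a pair of layer patterns by rank-1 nonnegative factorization. The tiny target, the iteration count and the normalization step are illustrative assumptions; this is a swapped-in textbook technique, not Wetzstein’s actual algorithm, which works on full images and requires far more computation.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def rank1_factorize(target: Matrix, iterations: int = 50) -> Tuple[List[float], List[float]]:
    """Approximate target[i][j] ~= rear[i] * front[j] with nonnegative vectors.

    Uses alternating least-squares updates; for a nonnegative target and a
    rank-1 model these coincide with Lee-Seung multiplicative NMF updates.
    Assumes the target has at least one positive entry.
    """
    n, m = len(target), len(target[0])
    rear, front = [1.0] * n, [1.0] * m
    for _ in range(iterations):
        ff = sum(f * f for f in front)
        rear = [sum(target[i][j] * front[j] for j in range(m)) / ff for i in range(n)]
        rr = sum(r * r for r in rear)
        front = [sum(target[i][j] * rear[i] for i in range(n)) / rr for j in range(m)]
    return rear, front


def to_layers(rear: List[float], front: List[float]) -> Tuple[List[float], List[float], float]:
    """Scale both patterns into the 0..1 transmittance range an LCD can show,
    moving the leftover scale factor into the backlight brightness."""
    r_max, f_max = max(rear), max(front)
    return [r / r_max for r in rear], [f / f_max for f in front], r_max * f_max


# Toy 3x3 target: brightness of the ray through rear pixel i and front pixel j.
target = [[0.20, 0.16, 0.08],
          [0.50, 0.40, 0.20],
          [1.00, 0.80, 0.40]]
rear, front = rank1_factorize(target)
rear_t, front_t, backlight = to_layers(rear, front)
error = max(abs(target[i][j] - backlight * rear_t[i] * front_t[j])
            for i in range(3) for j in range(3))
print("rear LCD:", [round(x, 3) for x in rear_t])
print("front LCD:", [round(x, 3) for x in front_t])
print(f"backlight scale {backlight:.3f}, max error {error:.2e}")
```

This toy target is an exact outer product, so it is reproduced almost perfectly; for a target that is not, the same routine returns an approximation, and the remaining error shows what two static layers cannot display.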

Progress on the key issue of reducing latency was described by Peter Lincoln of the University of North Carolina, Chapel Hill, USA, at the IEEE Virtual Reality Conference. Using a conventional graphics processor, he demonstrated end-to-end latency of only 80 microseconds, compared with roughly 11 milliseconds in the production version of the Oculus Rift. So far he has produced only grayscale images with a bulky monocular laboratory system, but his results point to the long-term possibility of much lower latency than today’s systems.

David Whittinghill of Purdue University found that adding a “virtual nose” to a VR system seemed to reduce reports of sickness from users. The viewer’s eyes fuse the stereo pair to form a 3-D image. [© Purdue]

The (unpredictable) human side

In these ways and others, researchers in VR are attempting to address the major challenge from the human sensory system—the result of millions of years of evolution. Motion sickness, cyber sickness and simulator sickness are all variations on the same theme, and their effects tend to grow worse the longer one uses the equipment.

One particular challenge in creating a technical fix for these human reactions is that susceptibility, as well as the ability to adapt after repeated exposures, varies widely among individuals and is hard to predict. Military studies going back decades show that most pilot trainees can adapt to flight simulators. Gamers also tend not to suffer much cyber sickness. But both groups tend to be highly motivated to learn to tolerate cyber effects; more casual users may never come back after a bad experience.

Long-duration exposures produce another problem—the body adjusts to the simulator and has to readjust to reality. Pilots emerging from long simulator sessions are urged to take time to readapt before walking down stairs or driving home. Gamers also can have problems readjusting. Quarles compares the adaptation to scuba diving, where the body adapts to the feeling of weightlessness, but after a 45-minute dive finds the legs very heavy when getting out of the water.

Ongoing research is trying to unravel the causes of the effects. In recent experiments, Shawn Green and Bas Rokers of the University of Wisconsin in Madison found that the better people could discriminate 3-D motion in the real world, the more likely they were to get sick in the virtual one using the Oculus DK2 headset. (That’s an ironic finding, as it means the people most likely to benefit from using VR are the ones most likely to be nauseated by it.) Green and Rokers also are exploring how head motion, used by pigeons and cats to estimate distance, affects human perception. Their goal is to help people learn how to discount errant cues, because VR technology can’t avoid all sensory mismatches.

Like 3-D films that drop monsters into moviegoers’ laps, games that send you soaring through space when seated in an armchair can trouble the tummy. So far, new technology—particularly shorter latency times and higher-resolution displays—seems to be easing the troublesome sensory discord. Careful choice of content by designers can also help to head off clashes between sensory inputs. And it will be fascinating to see if the careful combination of a careening roller coaster and synchronized virtual reality can cancel out the conflicting cues, and create a thrilling ride worth repeating.

Jeff Hecht is an OSA Senior Member and freelance writer who covers science and technology.

References and Resources

- http://spectrum.ieee.org/geek-life/reviews/virtual-reality-roller-coasters-are-here-and-everywhere
- J. Hecht, “3-D TV and Movies,” Opt. Photon. News 22(2), 20 (February 2011).
- www.slashgear.com/meta-2-augmented-reality-headset-dev-kit-release-the-oculus-of-ar-02430020/
- B. Allen et al., “Visual 3D motion acuity predicts discomfort in 3D stereoscopic environments,” Entertainment Computing (2016), doi: http://dx.doi.org/10.1016/j.entcom.2016.01.001